The Low-cost Path to AI Mastery: Building a Wiki Navigator With Pure Similarity Search

The world of Artificial Intelligence (AI) and Large Language Models (LLMs) often conjures images of immense computing power, proprietary platforms, and colossal GPU clusters. This perception can create a high barrier to entry, discouraging curious developers from exploring the fundamentals.

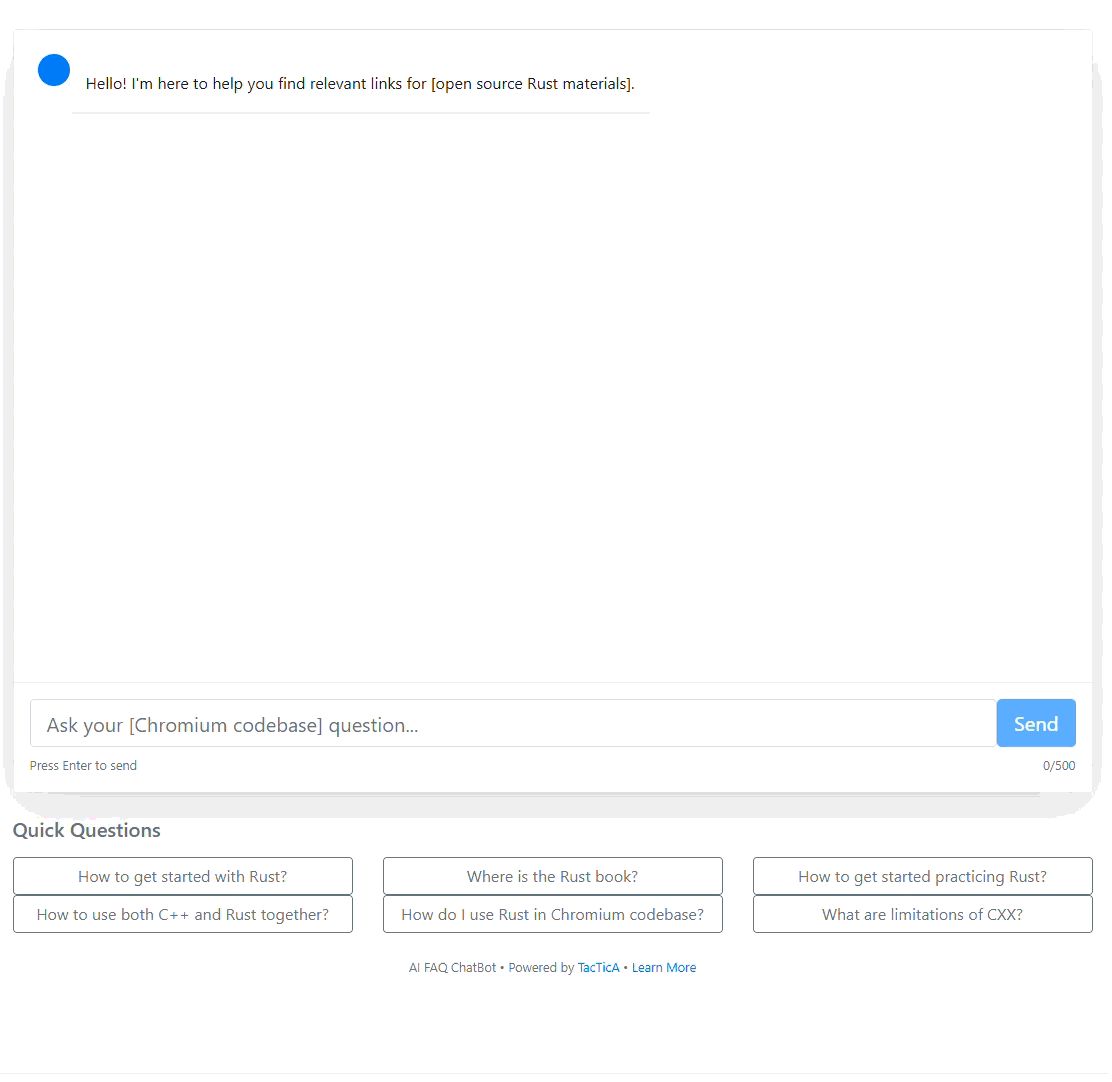

I recently embarked on a project—a sophisticated yet simple AI-powered chatbot I call the Wiki Navigator—that proves this complexity is often unnecessary for learning the essentials. By focusing on core concepts like tokenization, vector embeddings, and cosine similarity, I built a functional RAG (Retrieval Augmented Generation) search solution that operates across 9,000 documents in the Chromium open-source codebase. It took me a few hours to run and next day I was able to re-use the same codebase to train Chat bot on open-source books about the Rust programming language to have useful help during my Rust learning journey.

The main revelation? You don't need to dive too deep with huge GPU cards to learn how the essentials of LLM and AI work. It is a supremely rewarding and practical experience to learn by doing, immediately yielding results without incurring significant expense.

Deconstructing AI: the magic of Vector Embeddings

Our Wiki Navigator functions not by generating novel text, but by reliably retrieving contextual replies and relevant links from source documentation, preventing hallucination by strictly following the links in the wiki. It is essentially a contextual search engine powered by Retrieval Augmented Generation (RAG).

The core concept is surprisingly straightforward:

- Preparation (Training Phase): Convert all your documents (like Q&A pairs and wiki content) into a digital representation known as vector embeddings (watch this great explanation if you didn't yet). This process, which can take an hour or so for large corpora, creates a vector index.

- Querying (Query Phase): When a user submits a question, that query is also converted into a vector embedding.

- Comparison: The system compares the query vector against the document vectors using the Cosine Similarity operation to find the closest matches. If we found two vectors near to each other - that most likely means match in terms of the context (though, as we can see later, not always).

This simple process works effectively for tasks like navigating documentation and finding relevant resources.

Practicality over theory: ensuring algorithmic parity

While many articles focus on the theory of similarity search, the real fun lies in implementing it. Interestingly enough, to run simplistic MVP you take NO AI MODEL, which makes it possible to be deployed statically, running entirely in the browser, making it perfect for hosting on platforms like GitHub Pages. This static deployment requires the training application (C#) and the client application (JavaScript) to share identical algorithms for tokenization and vector calculation, ensuring smooth operation and consistent results.

The training pipeline, which prepares the context database, is built in C# (located in TacTicA.FaqSimilaritySearchBot.Training/Program.cs). During training, data is converted into embeddings using services like the SimpleEmbeddingService (hash-based, in case of NO AI model for static web site deployment), the TfIdfEmbeddingService (TF-IDF/Keyword-Based Similarity - an extended version of trainer), or the sophisticated OnnxEmbeddingService (based on the pre-trained all-MiniLM-L6-v2 transformer model, which would require you to run some good back-end with AI model loaded into RAM).

In this article I mainly focus on the first option - simplistic hash-based approach, while I do also have an AI-Model-based solution running in production, for example, on https://tactica.xyz/#/rust-similarity-search. This is full-fledged React application running all comparisons on the back-end, but the fundamental concepts stay the same.

The core mathematical utilities that define tokenization and vector operations reside in C# within TacTicA.FaqSimilaritySearchBot.Shared/Utils/VectorUtils.cs. To ensure the client-side browser application running in JavaScript via TacTicA.FaqSimilaritySearchBot.Web/js/chatbot.js (or TacTicA.FaqSimilaritySearchBot.WebOnnx/js/chatbot.js for the AI-model based one) can process new user queries identically to C# training algorithm, we must replicate those crucial steps.

It is also critical to make sure that all calcuations produce same outputs in both C# and JavaScript, during both training and running, which might take additional efforts, but still pretty straightforward. For example these two:

From SimpleEmbeddingService.cs:

// This method is taken from chatbot.js to be very similar to let Simple Embedding Service work at all! private Func<double> SeededRandom(double initialSeed) { double seed = initialSeed; return () => { seed = (seed * 9301.0 + 49297.0) % 233280.0; return seed / 233280.0; }; } From chatbot.js:

// Seeded random number generator seededRandom(seed) { return function() { seed = (seed * 9301 + 49297) % 233280; return seed / 233280; }; } C# training example: vector utility

In the C# training application, the VectorUtils class is responsible for calculating cosine similarity, which is the heart of the comparison operation:

// Excerpt from TacTicA.FaqSimilaritySearchBot.Shared/Utils/VectorUtils.cs // This function calculates how 'similar' two vectors (embeddings) are. public static double CalculateCosineSimilarity(float[] vectorA, float[] vectorB) { // [C# Implementation Detail: Normalization and dot product calculation // to determine similarity score between 0.0 and 1.0] // ... actual calculation happens here ... // return similarityScore; } Running training set will take a hour, because we are NOT using GPU's, parallelization or any other fancy staff. Because we are learning the basics and do not want overcomplicate things for now:

JavaScript client example: real-time search

The client application must then perform the same calculation in real time for every user query against the pre-computed index. The system relies on fast in-memory vector search using this very simplistic algorithm.

// Excerpt from TacTicA.FaqSimilaritySearchBot.Web/js/chatbot.js // This function is executed when the user submits a query. function performSimilaritySearch(queryVector, documentIndex) { let bestMatch = null; let maxSimilarity = 0.0; // Convert user query to vector (if using the simple hash/TF-IDF approach) // or use ONNX runtime for transformer model encoding. // Iterate through all pre-calculated document vectors for (const [docId, docVector] of Object.entries(documentIndex)) { // Ensure the JS implementation of Cosine Similarity is identical to C#! const similarity = calculateCosineSimilarity(queryVector, docVector); if (similarity > maxSimilarity) { maxSimilarity = similarity; bestMatch = docId; } } // Apply the configured threshold (default 0.90) for FAQ matching. if (maxSimilarity >= CONFIG.SimilarityThreshold) { // [Action: Return FAQ Response with Citation-Based Responses] } else { // [Action: Trigger RAG Fallback for Full Document Corpus Search] } return bestMatch; } By ensuring that the underlying vector utilities are functionally identical in both C# and JavaScript, we guarantee that the query result will be consistent, regardless of whether the embedding was calculated during the training phase or the real-time query phase.

As you can see, it doesn’t take long to have a running app.

Beyond the Simple Lookup

Our bot is far more sophisticated than a simple keyword search. It is engineered with a three-phase architecture to handle complex queries:

- Phase 1: Context Database Preparation. This is the initial training where Q&A pairs and document chunks are converted to vectors and stored in an index.

- Phase 2: User Query Processing. When a query is received, the system first attempts Smart FAQ Matching using the configured similarity threshold (default: 0.90). If the confidence score is high, it returns a precise answer.

- Phase 3: General Knowledge Retrieval (RAG Fallback). If the FAQ match confidence is low, the system activates RAG Fallback, searching the full document corpus, performing Top-K retrieval, and generating synthesized answers with source attribution.

This sophisticated fallback mechanism ensures that every answer is citation-based, providing sources and confidence scores. Depending on the use cases you can switch ON or OFF citations as the quality of response hugely depends on the amount of Questions & Answers pairs you used during training. Low amount of Q&A would make this bot find irrelevant citations more frequently. Thus, if you simply don't have enough Q&A - bot still can be useful by returning valid URL links, but not citations. With good amount of Q&A you can notice the quality of answers higher and higher.

The nuances of Similarity Search

This hands-on exploration immediately exposes fascinating, practical insights that often remain hidden in theoretical papers.

For instance, comparing approaches side-by-side reveals that the bot can operate both with an AI model (using the transformer-based ONNX embedding) and even without it, leveraging pure hash-based embeddings. While the hash-based approach is simple, the efficacy of embeddings, even theoretically, is limited, as discussed in the paper "On the Theoretical Limitations of Embedding-Based Retrieval".

Furthermore, working directly with cosine similarity illuminates concepts like "Cosine Similarity Abuse"—a fun, practical demonstration of how one can deliberately trick non-intelligent AI systems. This is only scratch of a surface in the bigger "Prompt Injection" problem (example good reading) that truly puts a serious threat for the users of AI and software engineers who builts AI for production use.

Your next AI project starts now

Building a robust, functional bot that handles 9,000 documents across a complex project like Chromium requires technical diligence, but it does not require massive infrastructure. This project proves that the fundamental essentials of LLM and AI—tokenization, vectorization, and similarity comparison—are perfectly accessible to anyone willing to dive into the code.

The Wiki Navigator serves as a powerful demonstration of what is possible with similarity search on your own internal or corporate data.

I encourage you to explore the open-source code and see how quickly you can achieve tangible results:

- Source Code:

https://github.com/tacticaxyz/tactica.faq.similaritysearch - Chromium Demo:

https://tactica.xyz/#/chromium-similarity-search - Rust Demo:

https://tactica.xyz/#/rust-similarity-search

This is just the beginning. Future explorations can dive deeper into topics like advanced vector search techniques, leveraging languages like Rust in AI tooling, and optimizing AI for browser-based applications. Start building today!

You May Also Like

White House meeting could unfreeze the crypto CLARITY Act this week, but crypto rewards likely to be the price

The Future of Telecommunications (2025–2030): 5G, 6G, IoT, AI & Security