RECKONING Method: Bi-Level Optimization for Dynamic Knowledge Encoding and Robust Reasoning

Table of Links

Abstract and 1. Introduction

2. Background

3. Method

4. Experiments

4.1 Multi-hop Reasoning Performance

4.2 Reasoning with Distractors

4.3 Generalization to Real-World Knowledge

4.4 Run-time Analysis

4.5 Memorizing Knowledge

5. Related Work

6. Conclusion, Acknowledgements, and References

A. Dataset

B. In-context Reasoning with Distractors

C. Implementation Details

D. Adaptive Learning Rate

E. Experiments with Large Language Models

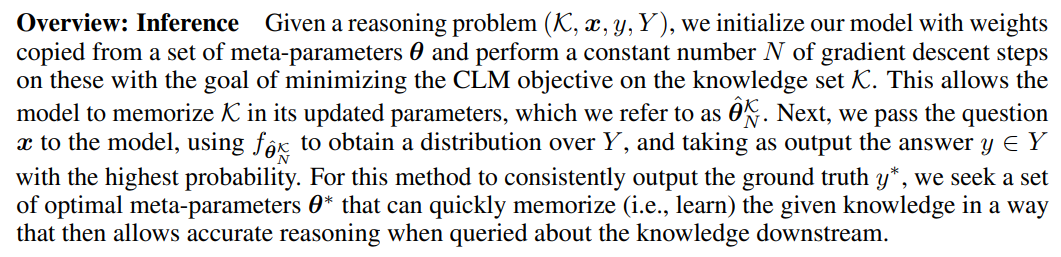

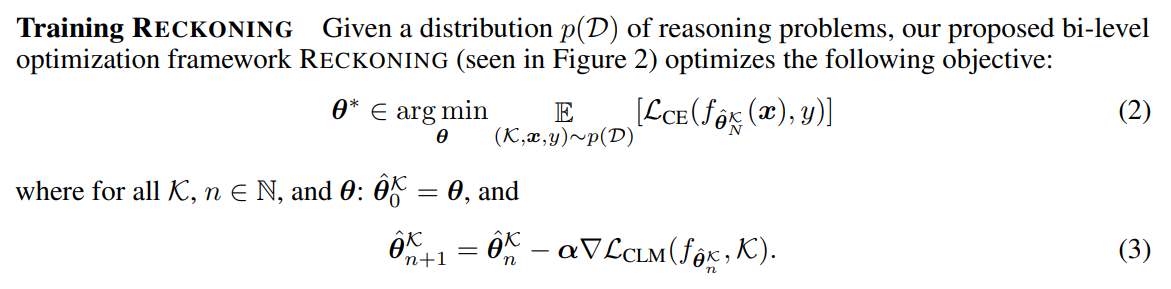

3 Method

Addressing these challenges, we propose RECKONING (REasoning through dynamiC KnOwledge eNcodING), which solves reasoning problems by first memorizing the provided contextual knowledge and then using this encoded knowledge to answer downstream questions. Specifically, RECKONING uses bi-level optimization to learn a set of meta-parameters primed to encode relevant knowledge in a limited number of gradient steps. The model can then use its updated weights to solve reasoning problems over this knowledge, without the knowledge itself being presented again.
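The bi-level structure described above can be illustrated with a deliberately tiny sketch. This is not RECKONING's implementation: the paper applies the idea to a language model, whereas here, as a stated assumption, the "model" is a two-parameter line, each task's "knowledge" is three (x, y) facts, and its "question" is one held-out pair from the same line. The inner loop takes a fixed number of gradient steps on the knowledge loss starting from the meta-parameters; the outer loop updates those meta-parameters using the question loss of the adapted weights, differentiating through the inner steps. All function names (`inner_loop`, `meta_loss_and_grad`, `meta_train`, etc.), learning rates, and step counts are hypothetical choices for illustration.

```python
import random

# Hypothetical toy setup (not from the paper): the "model" is a line
# f(x) = w * x + b with parameters theta = [w, b]; each task's "knowledge"
# is a few (x, y) facts and its "question" is a held-out (x, y) pair.


def predict(theta, x):
    return theta[0] * x + theta[1]


def mse(theta, pairs):
    return sum((predict(theta, x) - y) ** 2 for x, y in pairs) / len(pairs)


def mse_grad(theta, pairs):
    """Gradient of the mean squared error w.r.t. theta = [w, b]."""
    errs = [(predict(theta, x) - y, x) for x, y in pairs]
    n = len(pairs)
    return [sum(2 * e * x for e, x in errs) / n, sum(2 * e for e, _ in errs) / n]


def mse_hessian(pairs):
    """Hessian of the MSE for a linear model (constant in theta)."""
    n = len(pairs)
    hww = sum(2 * x * x for x, _ in pairs) / n
    hwb = sum(2 * x for x, _ in pairs) / n
    return [[hww, hwb], [hwb, 2.0]]


def matmul2(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def inner_loop(theta, knowledge, lr, steps):
    """Inner level: encode the knowledge with a few gradient steps.

    Also returns J = d(theta_N)/d(theta_0), the Jacobian the outer level
    needs in order to differentiate through the inner updates.
    """
    h = mse_hessian(knowledge)
    step_jac = [[(1.0 if i == j else 0.0) - lr * h[i][j] for j in range(2)] for i in range(2)]
    jac = [[1.0, 0.0], [0.0, 1.0]]
    for _ in range(steps):
        g = mse_grad(theta, knowledge)
        theta = [theta[0] - lr * g[0], theta[1] - lr * g[1]]
        jac = matmul2(step_jac, jac)  # each step multiplies by (I - lr * H)
    return theta, jac


def meta_loss_and_grad(theta, task, lr, steps):
    """Outer level: question loss of the adapted weights, plus its exact
    gradient w.r.t. the meta-parameters (J^T times the outer gradient)."""
    knowledge, question = task
    adapted, jac = inner_loop(theta, knowledge, lr, steps)
    g_out = mse_grad(adapted, question)
    meta_grad = [jac[0][i] * g_out[0] + jac[1][i] * g_out[1] for i in range(2)]
    return mse(adapted, question), meta_grad


def sample_task(rng):
    slope, offset = rng.uniform(0.5, 1.5), rng.uniform(2.0, 4.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(4)]
    facts = [(x, slope * x + offset) for x in xs]
    return facts[:3], facts[3:]  # (knowledge, question)


def meta_train(tasks, lr_inner=0.1, lr_outer=0.05, steps=2, epochs=200):
    theta = [0.0, 0.0]  # meta-parameters shared across all tasks
    for _ in range(epochs):
        grads = [meta_loss_and_grad(theta, t, lr_inner, steps)[1] for t in tasks]
        theta = [theta[i] - lr_outer * sum(g[i] for g in grads) / len(tasks) for i in range(2)]
    return theta


def avg_question_loss(theta, tasks, lr_inner=0.1, steps=2):
    return sum(meta_loss_and_grad(theta, t, lr_inner, steps)[0] for t in tasks) / len(tasks)


if __name__ == "__main__":
    rng = random.Random(0)
    tasks = [sample_task(rng) for _ in range(32)]
    before = avg_question_loss([0.0, 0.0], tasks)
    after = avg_question_loss(meta_train(tasks), tasks)
    print(f"question loss after inner loop: {before:.3f} -> {after:.3f}")
```

Because the inner loss in this toy is quadratic in the parameters, each inner step has the constant Jacobian I - αH, so the sketch can backpropagate through the inner loop exactly. With a real network, one would typically rely on automatic differentiation through the inner steps (or on a first-order approximation) instead.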


:::info Authors:

(1) Zeming Chen, EPFL ([email protected]);

(2) Gail Weiss, EPFL ([email protected]);

(3) Eric Mitchell, Stanford University ([email protected]);

(4) Asli Celikyilmaz, Meta AI Research ([email protected]);

(5) Antoine Bosselut, EPFL ([email protected]).

:::

:::info This paper is available on arXiv under CC BY 4.0 DEED license.

:::
