Why Transformers Struggle with Global Reasoning

Table of Links

Abstract and 1. Introduction

1.1 Syllogisms composition

1.2 Hardness of long compositions

1.3 Hardness of global reasoning

1.4 Our contributions

2. Results on the local reasoning barrier

2.1 Defining locality and auto-regressive locality

2.2 Transformers require low locality: formal results

2.3 Agnostic scratchpads cannot break the locality

3. Scratchpads to break the locality

3.1 Educated scratchpad

3.2 Inductive Scratchpads

Conclusion, Acknowledgments, and References

A. Further related literature

B. Additional experiments

C. Experiment and implementation details

D. Proof of Theorem 1

E. Comment on Lemma 1

F. Discussion on circuit complexity connections

G. More experiments with ChatGPT

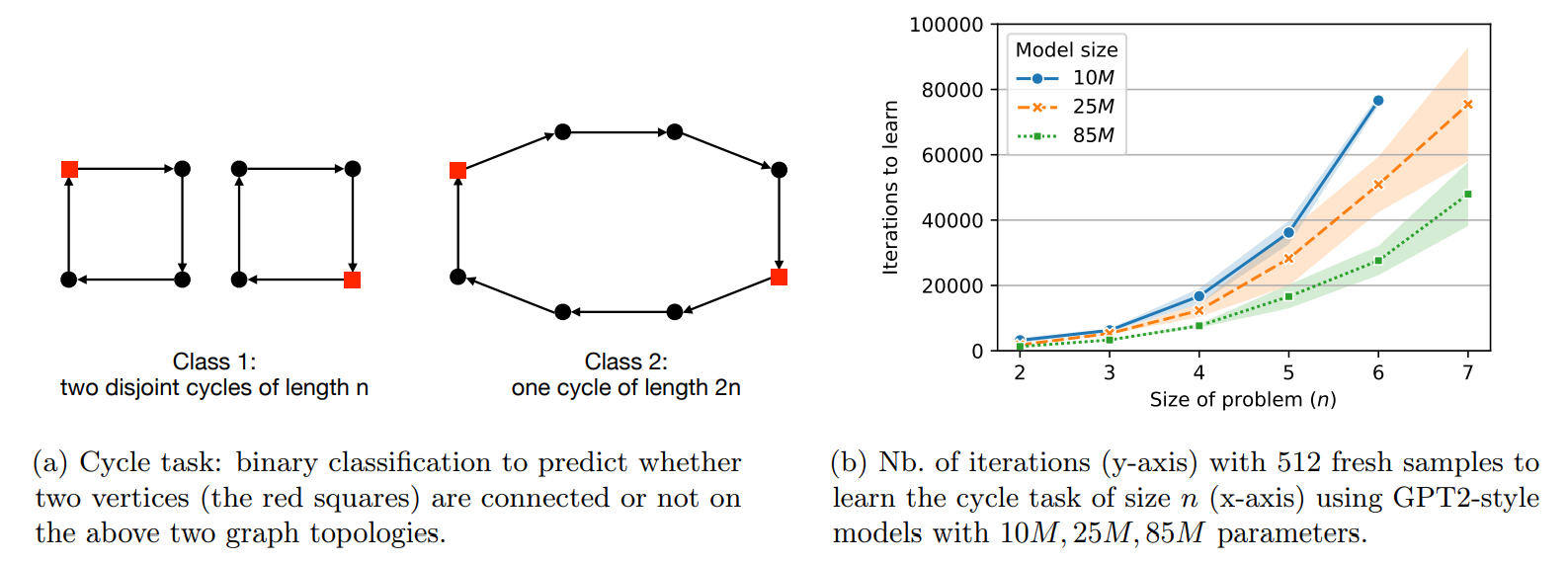

1.3 Hardness of global reasoning

As discussed previously, the cycle task appears to be challenging for Transformers because it requires some global reasoning. Other tasks, such as subset parities, exhibit the same challenge. However, the latter can be proved not to be efficiently learnable by various regular neural networks and noisy gradient descent, since one can explicitly construct a class of functions (through orbit arguments [12, 13]) that has large statistical dimension [14] or low cross-predictability [12, 15] (see Appendix A.2). For the cycle task, we have a single distribution, and it is unclear how to use the invariances of Transformers to obtain arguments as in [12, 13], as the input distribution is not invariant under the symmetries of the model. We would thus like to develop a more general complexity measure that unifies why such tasks are hard for Transformer-like models and that formalizes the notion of a ‘local reasoning barrier’ when models are trained from scratch. We would also like to understand how the scratchpad methodologies that have proved helpful in various settings (see Section 3) can help here. This raises the following questions:

(1) How can we formalize the ‘local reasoning barrier’ in general terms?

(2) Can we break the ‘local reasoning barrier’ with scratchpad methodologies?
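The difficulty of subset parities mentioned above can be made concrete with a small exact computation. The following is a minimal sketch, not the paper's locality measure: the function names, the input length `n = 6`, and the hidden subset `(0, 2, 5)` are all assumptions chosen for illustration. It enumerates every input in {0,1}^n and shows that the parity of a hidden subset is uncorrelated with the parity of any strict sub-collection of its coordinates, so no partial view of the relevant bits carries a signal about the label.

```python
from itertools import product


def parity_sign(x, subset):
    """Return -1 if the bits of x indexed by `subset` have odd sum, else +1."""
    return -1 if sum(x[i] for i in subset) % 2 else 1


def correlation(n, target_subset, probe_subset):
    """Exact correlation between two parities under uniform inputs in {0,1}^n."""
    total = sum(
        parity_sign(x, target_subset) * parity_sign(x, probe_subset)
        for x in product((0, 1), repeat=n)
    )
    return total / 2 ** n


# Hypothetical setup: 6 input bits, label = parity of bits 0, 2 and 5.
n = 6
target = (0, 2, 5)

full_view = correlation(n, target, target)      # all relevant bits observed
partial_view = correlation(n, target, (0, 2))   # one relevant bit missing
single_bit = correlation(n, target, (0,))       # a single relevant bit
```

Under these assumptions `full_view` is 1, while `partial_view` and `single_bit` are both 0: the label only becomes predictable once all coordinates of the hidden subset are combined.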

1.4 Our contributions

We provide the following contributions:

– A general conjecture (Conjecture 1), backed by experimental results, that claims efficient weak learning is achievable by a regular Transformer if and only if the distribution locality is constant.

– A theorem (Theorem 1) that proves the negative side of the above conjecture, the locality barrier, in the instance of a variant of the cycle task under certain technical assumptions. (The cycle task is also put forward in the paper as a simple benchmark to test the global reasoning capabilities of models.)

• We then switch to the use of ‘scratchpads’ to help with the locality barrier:

– Agnostic scratchpad: we extend Theorem 1 to cases where a polynomial-size scratchpad is used by the Transformer, without any supervision of the scratchpad. That is, the scratchpad gives the Transformer additional memory space to compute intermediate steps. This shows that efficient weak learning is still not possible with such an agnostic scratchpad if the locality is non-constant. An educated guess about what to learn in the scratchpad, based on some knowledge of the target, is thus required.

– Educated scratchpad: we generalize the measure of locality to the ‘autoregressive locality’ to quantify when an educated scratchpad is able to break the locality of a task with subtasks of lower locality. We give experimental results showing that educated scratchpads with constant autoregressive locality allow Transformers to efficiently learn tasks that may originally have high locality. This gives a way to measure how useful a scratchpad can be to break a target into easier sub-targets.

– We introduce the notion of an inductive scratchpad, a type of educated scratchpad that exploits ‘induction’ compared to a fully educated scratchpad. We show that when the target admits an inductive decomposition, such as for the cycle, arithmetic, or parity tasks, the inductive scratchpad both breaks the locality and improves the OOD generalization in contrast to fully educated scratchpads. This gives significant length generalization on additions (from 10 to 20 or from 4 to 26 digits, depending on the method) and parities (from 30 to 50-55 bits). By comparison, using different methods, [17] can length generalize from 10 to 13 digits for additions, and [11] can get roughly 10 extra bits for parities with moderate accuracy.
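To give a feel for what an inductive decomposition can look like on the parity task, here is a minimal sketch. It is not the paper's scratchpad or token format: the state layout `(position, running parity)` and the names `step` and `inductive_scratchpad` are assumptions made for illustration. The point it shows is that each scratchpad state is produced from the input and the previous state alone, by one fixed rule, so every step involves only a constant number of quantities regardless of the input length.

```python
def step(bits, state):
    """Compute the next scratchpad state from the input and the previous state only."""
    position, running_parity = state
    return (position + 1, running_parity ^ bits[position])


def inductive_scratchpad(bits):
    """Return the full list of states; the last state holds the parity of all bits."""
    state = (0, 0)  # hypothetical initial state: nothing read yet, parity 0
    states = [state]
    while state[0] < len(bits):
        state = step(bits, state)
        states.append(state)
    return states


# The same one-step rule is reused unchanged on inputs of any length.
short_trace = inductive_scratchpad([1, 0, 1, 1])
long_trace = inductive_scratchpad([1, 0, 1, 1] * 10)
```

In this sketch `short_trace` ends in the state `(4, 1)`, since the input contains three ones. Because `step` never looks at earlier states or at the total length, nothing in the rule changes when moving from 4 bits to 40, which is the intuition behind the length generalization discussed above.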

:::info Authors:

(1) Emmanuel Abbe, Apple and EPFL;

(2) Samy Bengio, Apple;

(3) Aryo Lotfi, EPFL;

(4) Colin Sandon, EPFL;

(5) Omid Saremi, Apple.

:::

:::info This paper is available on arXiv under a CC BY 4.0 license.

:::

[1] Answering ‘yes/1’ if the syllogism can be obtained by composing input ones or ‘cannot tell/0’ otherwise.

[2] At the time of the experiments, ChatGPT was in particular not successful at these two tasks.
