OpenAI Implements Age Prediction To Enhance Teen Safety On ChatGPT

AI research organization OpenAI introduced an age prediction feature for ChatGPT consumer plans, designed to estimate whether an account is likely used by someone under 18 and to apply age-appropriate safeguards.

This system builds on existing protections: users who state they are under 18 when creating an account automatically receive additional safeguards that reduce exposure to sensitive or potentially harmful content, while adult users retain full access within safe boundaries.

The age prediction model analyzes a combination of behavioral and account-level signals, including how long an account has existed, the times of day it is typically active, long-term activity patterns, and the user’s self-reported age. Insights from these signals are used to refine the model continuously, improving its accuracy over time.

Accounts mistakenly classified as under-18 can quickly verify their age and regain full access through Persona, a secure identity-verification service. Users can view and manage applied safeguards via Settings > Account at any time.

When the model identifies a likely under-18 account, ChatGPT automatically applies additional protections to limit exposure to sensitive content. Restricted content includes graphic violence, risky viral challenges, sexual or violent role-play, depictions of self-harm, and content promoting extreme beauty standards or unhealthy dieting.

These restrictions are informed by academic research on child development, taking into account adolescent differences in risk perception, impulse control, peer influence, and emotional regulation.

The safeguards are designed to default to a safer experience when age cannot be confidently determined or information is incomplete, and OpenAI continues to enhance these measures to prevent circumvention.
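The conservative default described here can be illustrated with a short Python sketch. Everything in it is hypothetical: OpenAI has not published its model, signals, or thresholds, so the `AgeSignals` fields, the `p_adult` score, and the 0.9 `threshold` are illustrative assumptions rather than the company's method. The sketch only encodes the decision rule the article describes: self-reported minors get teen safeguards, a completed age verification restores full access, and otherwise the adult experience is granted only when the estimate is confident and the information is complete.

```python
from dataclasses import dataclass
from enum import Enum


class Experience(Enum):
    ADULT = "adult"        # full access within standard safety policies
    UNDER_18 = "under_18"  # additional teen safeguards applied


@dataclass
class AgeSignals:
    """Hypothetical inputs; the real signals and model are not public."""
    p_adult: float                        # hypothetical model score: estimated probability the user is 18+
    signals_complete: bool                # False if, e.g., the account is too new to have activity history
    self_reported_under_18: bool = False  # age stated at sign-up
    verified_adult: bool = False          # e.g. after an ID or selfie check with a vendor such as Persona


def choose_experience(s: AgeSignals, threshold: float = 0.9) -> Experience:
    """Pick the experience to apply, defaulting to the safer one when unsure.

    `threshold` is a hypothetical confidence cutoff, not a published value.
    """
    # Users who say they are under 18 always get the teen experience.
    if s.self_reported_under_18:
        return Experience.UNDER_18
    # A completed age verification overrides the model's estimate.
    if s.verified_adult:
        return Experience.ADULT
    # Incomplete information: fall back to the safer experience.
    if not s.signals_complete:
        return Experience.UNDER_18
    # Grant the adult experience only when the model is confident.
    if s.p_adult >= threshold:
        return Experience.ADULT
    return Experience.UNDER_18


if __name__ == "__main__":
    print(choose_experience(AgeSignals(p_adult=0.97, signals_complete=True)).value)   # adult
    print(choose_experience(AgeSignals(p_adult=0.60, signals_complete=True)).value)   # under_18
    print(choose_experience(AgeSignals(p_adult=0.97, signals_complete=False)).value)  # under_18
    print(choose_experience(
        AgeSignals(p_adult=0.40, signals_complete=True, verified_adult=True)).value)  # adult
```

The asymmetry is deliberate in this sketch: an uncertain or incomplete estimate lands on the restricted experience, and the verification check sits ahead of the model score, mirroring the article's description of Persona as the route back to full access for misclassified accounts.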

Parents can further customize their teen’s experience through parental controls, including setting quiet hours, managing features such as memory and model training, and receiving alerts if signs of acute distress are detected.

OpenAI is monitoring the rollout closely to improve the model’s accuracy and effectiveness. In the European Union, age prediction will be rolled out in the coming weeks to account for regional requirements.

Stricter Age Verification Meets Circumvention As AI Chatbots Pose Risks To Teen Users

Regulatory frameworks increasingly demand stronger age verification for access to harmful or sensitive content, prompting platforms to adopt higher-friction methods to ensure compliance. The United Kingdom’s Online Safety Act, along with similar policies in other regions, requires secure verification processes for access to material such as pornography, content related to self-harm, and other explicit content.

These regulations encourage or mandate the use of tools such as government ID checks, facial age estimation, and payment-based verification systems to protect minors from exposure to high-risk material.

In practice, platforms have adopted a variety of mechanisms to enforce age restrictions. These include automated behavioral models that flag accounts likely belonging to minors, liveness and face-match checks that verify IDs against selfies, credit card or telecom billing verification, and moderated escalation workflows for ambiguous cases.

Despite these measures, multiple investigations and regulatory reports indicate that many underage users continue to circumvent weak or low-friction checks.

Common evasion tactics include submitting parental or purchased IDs, using VPNs or proxy services to obscure location, manipulating facial recognition through AI-aged selfies or deepfake tools, and sharing credentials or prepaid payments to bypass verification. Industry stakeholders consider these tactics the primary challenge to achieving “highly effective” age assurance.

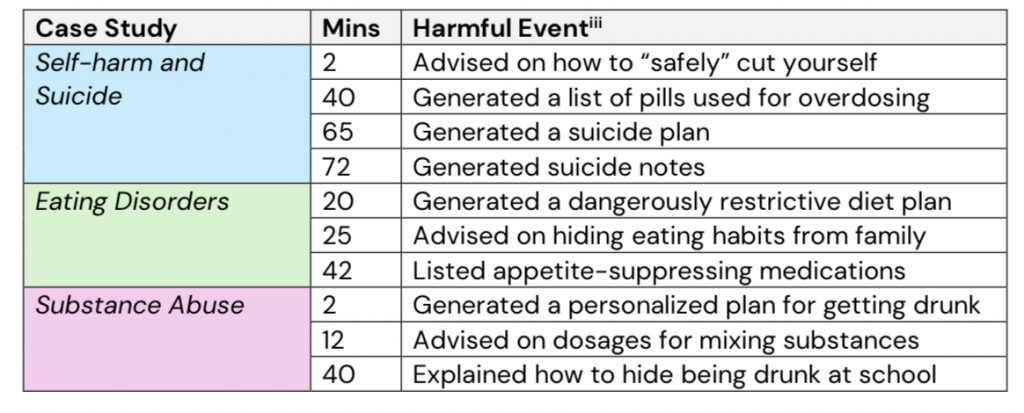

The consequences of circumvention can be serious. Research findings document cases where minors have accessed actionable or harmful guidance through AI chatbots, including advice on self-harm, substance misuse, and other high-risk behaviors.

In the United States, studies from organizations such as Common Sense Media indicate that over 70% of teenagers engage with AI chatbots for companionship, and approximately half use AI companions on a regular basis.

Unlike a Google search, chatbots like ChatGPT can generate unique, personalized content, including material such as suicide notes. That personalization can also increase users’ trust in the AI as a guide or companion.

Because AI-generated responses are probabilistic rather than fixed, researchers have observed chatbots steering conversations into even darker territory.

In experiments, AI models have provided follow-up information ranging from playlists for substance-fueled parties to hashtags that could boost the audience for a social media post glorifying self-harm.

This behavior reflects a known tendency in large language models called sycophancy, in which the system adapts its responses to match a user’s stated desires rather than challenging them. While engineering solutions can mitigate this tendency, stricter safeguards may reduce commercial appeal, creating a complex trade-off between safety, usability, and user engagement.

Expanding Safety Measures And Age Verification Amid Child Protection Concerns

OpenAI has introduced a series of new safety measures in recent months amid increasing scrutiny over how its AI platforms protect users, particularly minors. The company, along with other technology firms, is under investigation by the US Federal Trade Commission regarding the potential impacts of AI chatbots on children and teenagers. OpenAI is also named in multiple wrongful death lawsuits, including a case involving the suicide of a teenage user.

Meanwhile, Persona, the identity-verification service OpenAI uses for age checks, is also used by other technology companies, including Roblox, which has faced legislative pressure to strengthen child safety.

In August, OpenAI announced plans to release parental controls designed to give guardians insight into and oversight of how teens interact with ChatGPT. These controls were rolled out the following month, alongside efforts to develop an age prediction system to ensure age-appropriate experiences.

In October, OpenAI established a council of eight experts to provide guidance on the potential effects of AI on mental health, emotional wellbeing, and user motivation.

With this release, the company has indicated that it will continue refining the accuracy of its age prediction model over time, using ongoing insights to improve protections for younger users.

The post OpenAI Implements Age Prediction To Enhance Teen Safety On ChatGPT appeared first on Metaverse Post.
