Ideological Echo Chambers: The Hidden CX Cost of Engagement-Driven AI

When Algorithms Shape Belief: The Hidden CX Cost of Ideological Echo Chambers

Ever noticed how your digital feeds rarely challenge you anymore?

The opinions feel familiar.

The outrage feels predictable.

The certainty feels reassuring.

This is not accidental. It is designed.

AI-driven platforms optimize relentlessly for engagement—clicks, shares, watch time. In doing so, they have quietly become some of the most powerful experience designers of our time. Not just shaping what users see or buy, but how they think, trust, and interpret reality.

This is no longer a peripheral ethics debate.

It is a customer experience failure hiding in plain sight.

Algorithms as Invisible CX Designers

In traditional CX, experience design is deliberate. Journeys are mapped. Touchpoints are audited. Friction is removed thoughtfully.

Algorithmic experiences operate differently.

They are:

- opaque rather than transparent

- self-reinforcing rather than reflective

- optimized for reaction rather than understanding

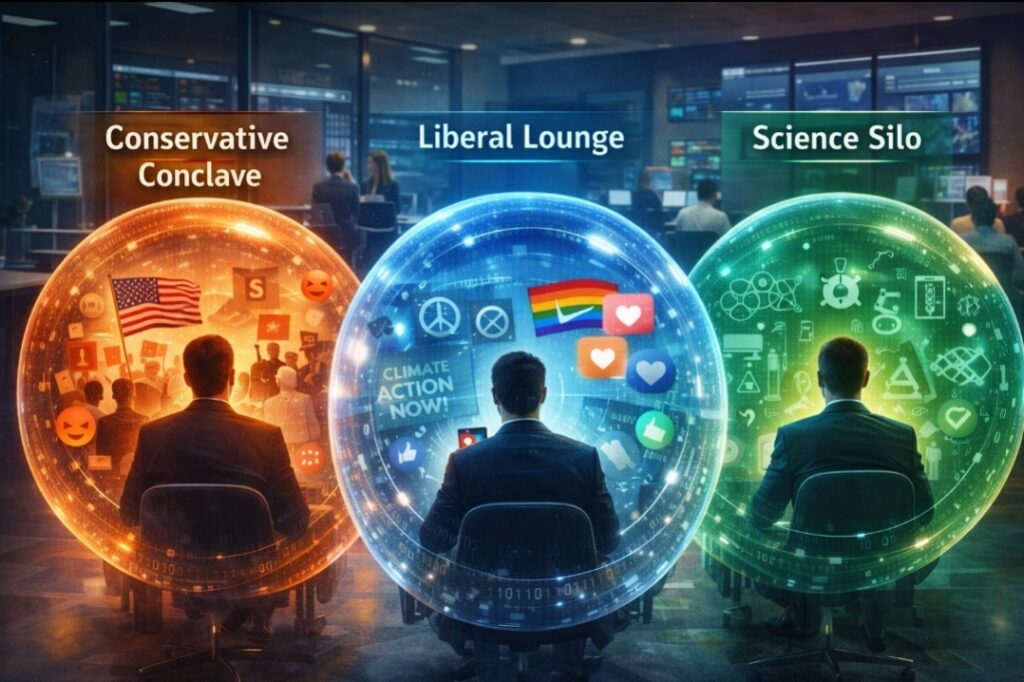

By repeatedly showing users content aligned with their existing beliefs, platforms create ideological comfort zones. These zones feel personalized and relevant. On the surface, they appear to deliver excellent CX.

But over time, they narrow perspective rather than expand it.

From a CX lens, this reveals a dangerous paradox:

When personalization eliminates productive discomfort, experience quality quietly deteriorates.

Engagement Is a Broken CX Proxy

Most digital platforms still reward one dominant metric: attention.

Attention, however, is a blunt instrument. It does not measure:

- trust

- comprehension

- emotional well-being

- long-term relationship value

In fact, engagement-optimized systems often thrive on emotional extremes—anger, fear, outrage, and moral certainty. These emotions increase dwell time but weaken nuance and dialogue.

This is a classic CX optimization failure: the proxy metric keeps improving while the experience it was meant to represent gets worse.

The outcome is experience debt—accumulating invisibly until trust collapses.
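To make the proxy problem concrete, here is a minimal Python sketch. Everything in it is a hypothetical assumption for illustration: the `Item` fields, the scores, and the `outrage_weight` parameter do not describe any real platform's ranking logic. It ranks the same three items twice, once by predicted engagement alone and once with a simple penalty on an invented outrage score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    predicted_engagement: float  # hypothetical score in [0, 1]
    outrage: float               # hypothetical score in [0, 1]


def rank_by_engagement(items):
    """Rank purely on the attention proxy, highest first."""
    return sorted(items, key=lambda i: i.predicted_engagement, reverse=True)


def rank_with_penalty(items, outrage_weight=0.5):
    """Rank on engagement minus a weighted outrage penalty (an assumed design)."""
    def score(i):
        return i.predicted_engagement - outrage_weight * i.outrage
    return sorted(items, key=score, reverse=True)


# Illustrative data only; the numbers are made up.
FEED = [
    Item("Calm explainer", 0.55, 0.10),
    Item("Furious hot take", 0.80, 0.90),
    Item("Balanced debate", 0.60, 0.30),
]

engagement_order = [i.title for i in rank_by_engagement(FEED)]
penalized_order = [i.title for i in rank_with_penalty(FEED)]

print(engagement_order)
print(penalized_order)
```

With these invented numbers, the engagement-only ranking puts the most emotionally charged item first, while the penalized ranking puts it last. The point is not that a penalty term is the right fix, only that what rises to the top depends entirely on what the score is allowed to see.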

Echo Chambers as a CX Anti-Pattern

In experience design, anti-patterns are common solutions that look effective but create future harm. Dark patterns, a related category, manipulate users into actions they may later regret.

Ideological echo chambers function similarly.

They:

- reduce exposure to diverse viewpoints

- reinforce confirmation bias

- reward certainty over curiosity

Over time, users do not merely consume content differently. They experience reality differently.

When people stop encountering thoughtful disagreement, dialogue weakens. Misinformation spreads more easily. Social trust erodes. These outcomes may appear external to CX, but they directly shape how users perceive platforms, institutions, and brands.

Experience does not stop at the interface.

It continues in the mind.

Case Examples: When Engagement Undermines Experience

YouTube and Radicalization Pathways

Multiple independent studies and platform admissions over the years have shown that YouTube’s recommendation system historically favored progressively extreme content because it increased watch time. Users searching for relatively neutral topics were often nudged toward more sensational or polarizing videos.

From a CX standpoint, the issue was not intent but design logic:

- engagement was rewarded

- escalation was efficient

- context was secondary

YouTube later adjusted its algorithms, explicitly acknowledging that watch-time optimization alone was harming user trust and platform credibility.

This was not a technical correction.

It was a CX correction.

Meta (Facebook) and Emotional Amplification

Internal research disclosed in the Facebook Papers revealed that content provoking strong emotional reactions, especially anger, was more likely to spread. While this increased engagement, it also amplified polarization and misinformation.

The CX lesson is stark:

- emotionally intense experiences feel compelling

- but they degrade perceived safety and trust over time

Meta’s subsequent investments in content moderation, integrity teams, and AI transparency initiatives reflect a belated recognition that engagement-heavy CX can still be broken CX.

TikTok and the Speed of Belief Formation

TikTok’s algorithm excels at rapid personalization. Within minutes, users can be funneled into highly specific content clusters. While this creates a powerful sense of relevance, it also accelerates belief formation without reflection.

The CX risk here is velocity:

- users feel understood quickly

- but understanding becomes shallow

- exposure diversity collapses

Fast personalization may feel delightful initially, but long-term experience quality depends on cognitive breadth, not just relevance.
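One way a team might track "exposure diversity" is sketched below, assuming each item a user sees carries a topic label. The function name `exposure_diversity`, the `catalog_size` parameter, and the sample feeds are all hypothetical. The sketch uses normalized Shannon entropy, which is one possible measure among several, not a method taken from any platform named here.

```python
import math
from collections import Counter


def exposure_diversity(topics, catalog_size):
    """Normalized Shannon entropy of the topics a user was shown.

    Returns 0.0 when everything comes from one topic and approaches 1.0
    when exposure is spread evenly across all `catalog_size` topics.
    """
    if catalog_size < 2:
        raise ValueError("catalog_size must be at least 2")
    counts = Counter(topics)
    if len(counts) <= 1:
        return 0.0  # empty feed or a single topic: no diversity
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(catalog_size)


# Illustrative feeds over an assumed catalog of four topics.
broad_feed = ["news", "sports", "science", "music"] * 5
narrow_feed = ["politics"] * 9 + ["sports"]
collapsed_feed = ["politics"] * 10

broad = exposure_diversity(broad_feed, catalog_size=4)
narrow = exposure_diversity(narrow_feed, catalog_size=4)
collapsed = exposure_diversity(collapsed_feed, catalog_size=4)

print(round(broad, 3), round(narrow, 3), round(collapsed, 3))
```

A score that falls steadily across a user's sessions would be one early signal that relevance is crowding out breadth, long before it shows up as fatigue or churn.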

From Customer Journeys to Cognitive Journeys

The next frontier of CX is not only behavioral.

It is cognitive.

AI-driven systems increasingly influence:

- how people interpret events

- which voices they trust

- what they consider normal or credible

Ignoring this influence is no longer neutral. It is a design decision.

CX leaders must now ask:

- What kinds of thinking does our experience encourage?

- What perspectives are systematically excluded?

- What emotional states are consistently rewarded?

These are not philosophical abstractions.

They are experience fundamentals in an AI-mediated world.

Digital Dignity as a CX Imperative

CX has long spoken about empathy. AI requires us to speak about dignity.

Digital dignity means respecting users not merely as content consumers, but as thinking, evolving humans. It challenges experience teams to confront uncomfortable truths:

- Are we optimizing for agency or addiction?

- Are we designing for clarity or confusion?

- Are we supporting critical thinking or undermining it?

Critical thinking cannot be automated—but it can be either supported or eroded by design choices.

Responsible experience design creates space for:

- perspective diversity

- context and nuance

- cognitive breathing room

Not constant emotional escalation.

Why This Matters for CX Leaders Now

The consequences of ignoring ideological echo chambers are no longer abstract:

- declining trust in platforms

- regulatory scrutiny

- brand safety concerns

- user fatigue and disengagement

What appears as a societal problem is increasingly a CX sustainability issue.

Users may not articulate it clearly, but they feel it:

- exhaustion

- cynicism

- distrust

These emotions eventually surface as churn.

Serving Humanity, Not Just Metrics

Technology has no intent.

Experience design does.

If AI-driven platforms continue optimizing primarily for engagement, ideological bubbles will keep tightening. Understanding will keep shrinking. Trust will keep eroding.

But another path exists.

One where responsible technology serves human understanding, not just human attention.

That path begins by recognizing ideological echo chambers not as unavoidable side effects—but as CX failures with societal consequences.

And CX, at its best, exists to identify broken experiences early—before users disengage emotionally, cognitively, or democratically.

In the algorithmic age, the most meaningful customer experience may not be delight.

It may be dignity.

The post Ideological Echo Chambers: The Hidden CX Cost of Engagement-Driven AI appeared first on CX Quest.
