Why AI Fails Without Data Foundations: Lessons from Building Platforms in Regulated Industries

Aniket Abhishek Soni is a Senior Data Engineer and researcher with more than six years of experience designing and leading large-scale data pipelines, cloud platforms, and AI-enabled systems across highly regulated sectors, including healthcare, financial services, and climate research. As organizations accelerate their use of artificial intelligence, he has seen firsthand how many initiatives fail before reaching production, not because of model limitations but because of weaknesses in the data foundations beneath them.

In this interview with AI Journal, Soni reflects on his early work in academic research and large-scale environmental data pipelines, and how those experiences shaped his approach to building secure, auditable, and resilient enterprise systems. He discusses what real AI readiness looks like at the data layer, why architecture and governance are inseparable from the success of applied AI, and what technical and mindset shifts enterprise leaders must make to build AI systems that are trustworthy, compliant, and capable of delivering long-term value.

1. How did you get started in data engineering and research, and what led you to focus on building large-scale data platforms that support applied AI in regulated industries like healthcare and financial services?

My career began at the intersection of academic research and data analysis on open-source datasets. I started by building ETL pipelines to ingest and standardize large volumes of global weather and geospatial data. That early work in climate research taught me a critical lesson: when the margin for error is small, the reliability of any analysis depends entirely on the strength of the underlying data pipeline.

This perspective naturally carried into regulated sectors such as healthcare and financial services. Whether optimizing portfolio risk analytics or analyzing complex healthcare claims data, the core challenge remains the same: building scalable platforms that are secure, auditable, and privacy-aware by design. My research background, combined with enterprise delivery experience, led me to approach these platforms as distributed systems that must safely support applied AI under real-world constraints.

2. You have years of experience designing enterprise data pipelines and cloud platforms. From your perspective, why do so many AI initiatives fail before they ever reach production?

Many AI initiatives fail because they are built on infrastructure that is not truly AI-ready. Models may perform well in controlled environments, but they quickly break down when exposed to real-world enterprise data and its latency issues, schema drift, incomplete records, and ethical data concerns. In these cases, the problem is rarely the model itself; it is the foundation beneath it.

Another common issue is insufficient scrutiny of data. Without standardized ingestion, reconciliation, and automated validation, AI systems remain experimental rather than operational. In my experience, reducing ambiguity at the data layer and enforcing disciplined validation can dramatically improve outcomes.
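As an illustration of the kind of automated validation described here, the following minimal Python sketch checks an incoming batch for schema drift and incomplete records before it reaches a model. The claims-style column names, the `EXPECTED_SCHEMA` and `REQUIRED_FIELDS` constants, and the `validate_batch` function are hypothetical and are not drawn from any platform Soni has built; production pipelines would typically rely on a dedicated validation framework.

```python
from dataclasses import dataclass, field

# Hypothetical schema for an incoming claims feed: column name -> expected Python type.
EXPECTED_SCHEMA = {"claim_id": str, "member_id": str, "amount": float, "service_date": str}
# Hypothetical fields that must be present and non-empty for a record to be usable.
REQUIRED_FIELDS = {"claim_id", "member_id", "amount"}


@dataclass
class ValidationReport:
    schema_errors: list[str] = field(default_factory=list)
    incomplete_rows: list[int] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.schema_errors and not self.incomplete_rows


def validate_batch(rows: list[dict]) -> ValidationReport:
    """Flag schema drift and incomplete records instead of silently passing them downstream."""
    report = ValidationReport()
    for i, row in enumerate(rows):
        # Schema drift: columns that appeared or disappeared relative to the contract.
        extra = sorted(set(row) - set(EXPECTED_SCHEMA))
        missing = sorted(set(EXPECTED_SCHEMA) - set(row))
        if extra or missing:
            report.schema_errors.append(f"row {i}: unexpected {extra}, missing {missing}")
            continue
        # Type drift: a non-null value whose type no longer matches the contract.
        for col, expected_type in EXPECTED_SCHEMA.items():
            value = row[col]
            if value is not None and not isinstance(value, expected_type):
                report.schema_errors.append(
                    f"row {i}: {col} is {type(value).__name__}, expected {expected_type.__name__}"
                )
        # Incomplete records: required fields that are null or empty strings.
        if any(row[col] is None or row[col] == "" for col in REQUIRED_FIELDS):
            report.incomplete_rows.append(i)
    return report
```

A pipeline would call `validate_batch` at ingestion and stop, or quarantine the batch, whenever `report.passed` is false, so that drift is surfaced as an explicit failure rather than absorbed by the model.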

3. Organizations often describe themselves as “AI-ready,” but based on your work at Cognizant, what does real AI readiness actually look like at the data foundation level?

Real AI readiness is defined by the quality and resilience of the data infrastructure. It goes beyond simply having a data lake or cloud platform. An AI-ready foundation is governed, observable, and designed for repeatability, with clear ownership and consistent data models.

In my current role working with enterprise-scale data and AI platforms, I’ve seen that readiness means embedding automated quality checks, metadata, and lineage directly into pipelines. The goal is to provide a trusted source of truth that enables AI models to produce consistent, explainable results across the enterprise. AI readiness is measured by reliability in production, not experimentation in isolation.
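One way to embed lineage directly into a pipeline, sketched here under simple assumptions, is to have every step record a content hash of each input and of its output. The `fingerprint` and `run_step` helpers and the fields of the lineage record are hypothetical illustrations, not the API of any specific lineage or metadata product.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable


def fingerprint(rows: list[dict]) -> str:
    """Deterministic SHA-256 of a dataset, independent of dict key order."""
    canonical = json.dumps(rows, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def run_step(
    step_name: str,
    transform: Callable[[dict[str, list[dict]]], list[dict]],
    inputs: dict[str, list[dict]],
    lineage_log: list[dict],
) -> list[dict]:
    """Run one pipeline step and append a lineage record of what it consumed and produced."""
    output = transform(inputs)
    lineage_log.append({
        "step": step_name,
        # Hash every named input so a result can be traced to the exact snapshot used.
        "inputs": {name: fingerprint(rows) for name, rows in inputs.items()},
        "output": fingerprint(output),
        "row_count": len(output),
        "run_at": datetime.now(timezone.utc).isoformat(),
    })
    return output
```

Because each record links input hashes to an output hash, a questionable result can be traced back through the log to the specific data snapshots that produced it. In practice the log would be written to a durable metadata store rather than an in-memory list.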

4. Data quality is frequently blamed for stalled AI projects, but you also emphasize architecture and governance. How do weak design decisions at the data layer undermine even well-built AI models?

If the underlying data model is weak, the resulting AI output will be inherently unreliable. Data sources are the bedrock of any pipeline, and poor architectural decisions, such as brittle schemas or tightly coupled transformations, create instability that propagates downstream. Even advanced models cannot compensate for data that is poorly structured or that changes without control.

Weak governance further amplifies this risk. Without clear ownership, version control, and lineage, organizations face silent failures where outputs appear correct but are based on stale or corrupted inputs. Architecture and governance determine whether AI systems are transparent and defensible, not just whether they produce predictions.
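The silent failure described here, where outputs look correct but rest on stale inputs, can be partly guarded against with an explicit freshness check before a model scores any data. This is a minimal sketch; the `assert_fresh` helper, the `StaleInputError` type, and the idea of a per-source age limit are illustrative assumptions rather than a prescribed design.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


class StaleInputError(RuntimeError):
    """Raised when a source has not been refreshed within its allowed window."""


def assert_fresh(
    source: str,
    last_updated: datetime,
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> None:
    """Fail loudly instead of letting a model score on silently stale data."""
    # Both timestamps are assumed to be timezone-aware (UTC).
    now = now or datetime.now(timezone.utc)
    age = now - last_updated
    if age > max_age:
        raise StaleInputError(
            f"{source} last updated {age} ago, exceeds limit of {max_age}"
        )
```

Raising an exception is a deliberate choice: it halts the run and turns a silent failure into a visible one. The `last_updated` value would need to come from a trusted source of metadata, such as the pipeline's own lineage records.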

5. Having worked across highly regulated environments, how should cloud-scale data systems be designed differently to balance performance, compliance, and responsible AI deployment?

In regulated environments, performance cannot come at the expense of compliance or responsibility. Systems must be designed with a privacy-by-design mindset, where access controls, encryption, and auditability are native to the data pipeline rather than added later.

Equally important is recognizing that performance requirements vary by use case. Real-time financial analytics demand different trade-offs than long-running healthcare batch pipelines. Balancing these needs requires cloud-native design choices that allow systems to scale efficiently while maintaining regulatory integrity and operational trust.

6. You have seen both data engineering and AI teams operate up close. What do business and technology leaders most often misunderstand about the relationship between data engineering and applied artificial intelligence?

A common misconception is that AI is meant to replace data engineering or human expertise. In reality, AI is a support capability that depends entirely on strong data engineering foundations. Data engineering provides the structure, consistency, and governance that allow AI systems to function reliably.

Another misunderstanding is that governance slows innovation and can therefore be treated as a low priority. In practice, well-governed pipelines accelerate AI adoption by reducing failures and rework. When data systems are stable, AI teams can focus on improving models rather than compensating for upstream instability. The two disciplines are inseparable.

7. In sectors like healthcare, finance, and climate research, where the margin for error is small, how does poor data governance increase risk when organizations deploy AI too quickly?

In high-stakes sectors, the risk from poor data governance tends to surface as incorrect decisions rather than obvious system failures. If AI models are trained on biased, outdated, or incomplete data, even the most advanced systems will fail to achieve reliable performance.

The absence of lineage and traceability is particularly dangerous. When organizations cannot identify which data inputs led to a faulty outcome, remediation becomes nearly impossible. This creates opaque systems that regulated industries cannot afford to trust. Governance provides the transparency necessary for responsible AI deployment.

8. Looking ahead, what mindset shifts and technical investments do enterprise leaders need to make now if they want AI systems that are reliable, governable, and capable of delivering long-term value?

Enterprise leaders must shift toward strategic integration, focusing on outcomes rather than effort. AI should not be treated as isolated experimentation, but as a specialized partner capable of supporting complex, multi-step decision workflows.

From a technical perspective, investments in data observability, metadata management, and automated governance are essential. Long-term AI value is built by integrating AI into operational systems rather than deploying it as a standalone tool. Trust, not novelty, ultimately determines the impact of sustainable AI.

You May Also Like

SOL Faces Pressure, DOT Climbs 2.3%, While BullZilla Presale Rockets Past $460K as the Top New Crypto to Join Now

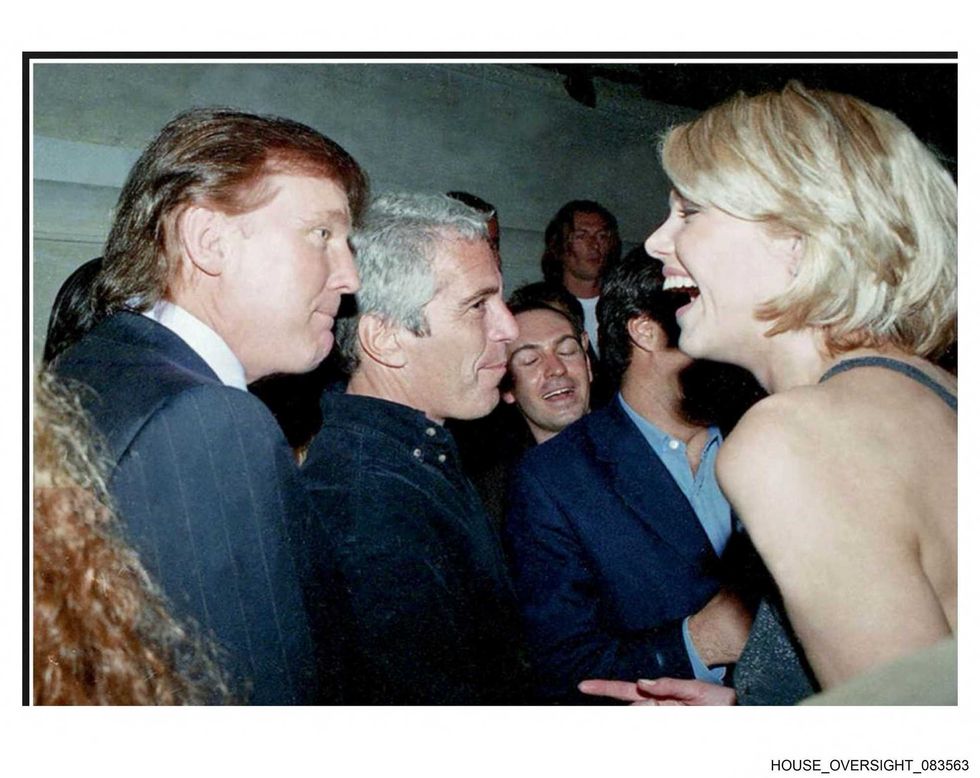

Trump's Epstein confession revealed in newly surfaced FBI files: 'Everyone knows'