From Guesswork to Ground Truth: Making Traffic Forecasts Physically Feasible

:::info Authors:

(1) Laura Zheng, Department of Computer Science, University of Maryland at College Park, MD, U.S.A.;

(2) Sanghyun Son, Department of Computer Science, University of Maryland at College Park, MD, U.S.A.;

(3) Jing Liang, Department of Computer Science, University of Maryland at College Park, MD, U.S.A.;

(4) Xijun Wang, Department of Computer Science, University of Maryland at College Park, MD, U.S.A.;

(5) Brian Clipp, Kitware;

(6) Ming C. Lin, Department of Computer Science, University of Maryland at College Park, MD, U.S.A.

:::

Table of Links

Abstract and I. Introduction

II. Related Work

III. Kinematics of Traffic Agents

IV. Methodology

V. Results

VI. Discussion and Conclusion, and References

VII. Appendix

\ Abstract: In trajectory forecasting tasks for traffic, future output trajectories can be computed by advancing the ego vehicle’s state with predicted actions according to a kinematics model. By unrolling predicted trajectories via time integration and models of kinematic dynamics, predicted trajectories should not only be kinematically feasible but also relate uncertainty from one timestep to the next. While current works in probabilistic prediction do incorporate kinematic priors for mean trajectory prediction, variance is often left as a learnable parameter, even though uncertainty at one timestep is inextricably tied to uncertainty at the previous timestep. In this paper, we show simple and differentiable analytical approximations describing the relationship between variance at one timestep and that at the next under the kinematic bicycle model. In our results, we find that encoding the relationship between variance across timesteps works especially well in suboptimal settings, such as with small or noisy datasets. We observe up to a 50% performance boost in partial-dataset settings and up to an 8% performance boost in large-scale learning compared to previous kinematic prediction methods on SOTA trajectory forecasting architectures out-of-the-box, with no fine-tuning.

I. INTRODUCTION

Motion forecasting in traffic involves predicting the next several seconds of movement for select actors in a scene, given the context of historical trajectories and the local environment. There are several different approaches to motion forecasting in deep learning, but they have one thing in common: scaling resources, dataset sizes, and model sizes will always help generalization capabilities. However, the difficult part of motion forecasting is not necessarily figuring out how the vehicles move but rather why they move that way, as there is always a human (at least, for now) behind the steering wheel.

\ As model sizes become bigger and performance increases, so does the complexity of learning the basics of vehicle dynamics, where, most of the time, vehicles travel in relatively straight lines behind the vehicle directly in front. Since training a deep neural network is costly, it benefits both resource consumption and model generalization to incorporate existing dynamics models into the training process, combining existing knowledge with powerful modern architectures. With embedded priors describing the relationships between variables across time, perhaps a network’s modeling power can be better allocated to understanding the why’s of human behavior, rather than the how’s.

\ Kinematic models have already been widely used in various autonomous driving tasks, especially trajectory forecasting and simulation tasks [1], [2], [3], [4]. These models explicitly describe how changes in the input parameters influence the output of the dynamical system. Kinematic input parameters are typically provided either by the robot policy as an action or by a human in direct interaction with the robot, e.g., steering, throttle, and brake for driving a vehicle. For tasks modeling decision-making, such as trajectory forecasting of traffic agents, modeling the input parameters may be more descriptive and interpretable than modeling the output directly. Moreover, kinematic models relate the input actions directly to the output observation; thus, any output of the kinematic model should, at the very least, be physically feasible in the real world [1].
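To make the idea of advancing a state with predicted actions concrete, the following is a minimal sketch of a kinematic bicycle rollout with explicit Euler integration. It is an illustration only and not the paper's implementation: the rear-axle reference point, the `wheelbase` of 2.7 m, the `dt` of 0.1 s, and the names `State`, `bicycle_step`, and `rollout` are all assumptions chosen for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class State:
    x: float        # position along the x axis (m)
    y: float        # position along the y axis (m)
    heading: float  # yaw angle (rad)
    speed: float    # longitudinal speed (m/s)


def bicycle_step(state, accel, steer, dt=0.1, wheelbase=2.7):
    """Advance one timestep with a rear-axle kinematic bicycle model.

    Assumed (illustrative) update, integrated with explicit Euler:
        x'       = speed * cos(heading)
        y'       = speed * sin(heading)
        heading' = speed / wheelbase * tan(steer)
        speed'   = accel
    """
    x = state.x + state.speed * math.cos(state.heading) * dt
    y = state.y + state.speed * math.sin(state.heading) * dt
    heading = state.heading + state.speed / wheelbase * math.tan(steer) * dt
    speed = state.speed + accel * dt
    return State(x, y, heading, speed)


def rollout(state, actions, dt=0.1, wheelbase=2.7):
    """Unroll a sequence of (accel, steer) actions into a trajectory."""
    trajectory = [state]
    for accel, steer in actions:
        state = bicycle_step(state, accel, steer, dt, wheelbase)
        trajectory.append(state)
    return trajectory


if __name__ == "__main__":
    start = State(x=0.0, y=0.0, heading=0.0, speed=10.0)
    # Ten steps of a gentle left turn at constant speed.
    for s in rollout(start, [(0.0, 0.05)] * 10):
        print(f"x={s.x:.2f} y={s.y:.2f} heading={s.heading:.3f}")
```

Because every position in the rollout is produced by the update rule, the trajectory cannot jump sideways or turn in place, whatever actions are supplied. This is the sense in which predicting actions rather than positions keeps the output feasible.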

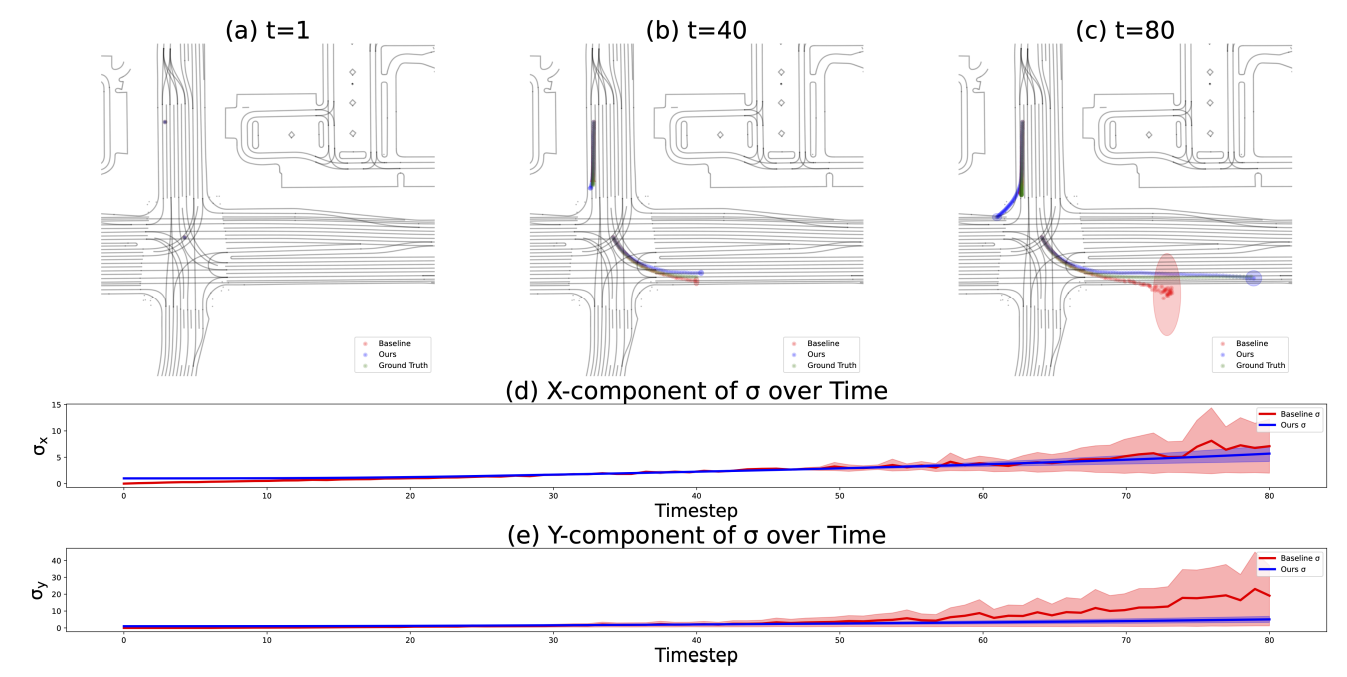

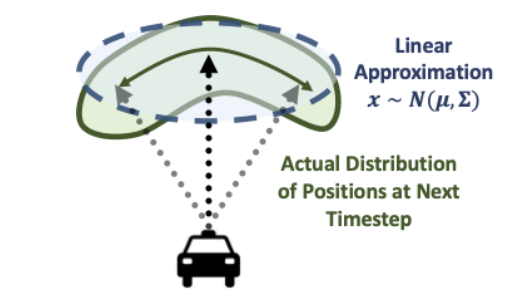

\ While previous works already use kinematic models to provide feasible output trajectories, none analytically account for how uncertainty compounds over the time horizon. Previous works apply kinematic models deterministically, either for deterministic trajectory prediction or to predict the average trajectory in probabilistic settings, leaving the variance as a learnable matrix.

\ Kalman filtering is a relevant, classical technique that uses kinematic models for trajectory prediction. Kalman filters excel in time-dependent settings where signals may be contaminated by noise, such as object tracking and motion prediction [5], [6]. Unlike classical Kalman filtering methods for motion prediction, however, we focus on models of learnable actions and behaviors rather than fixed constant-velocity or constant-acceleration models. We believe that combining elements of classical methods such as Kalman filtering with powerful modern deep learning architectures can give motion prediction in traffic the best of both worlds, where modeling capability meets stability.
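For readers unfamiliar with the classical baseline mentioned here, the sketch below shows the prediction step of a Kalman filter under a one-dimensional constant-velocity model. It is a textbook-style illustration and not taken from the paper; the function name `kalman_predict_cv`, the state layout (position, velocity), and the diagonal process noise terms `q_pos` and `q_vel` are assumptions made for this example.

```python
def kalman_predict_cv(pos, vel, cov, dt=0.1, q_pos=0.0, q_vel=0.0):
    """Kalman prediction step for a 1D constant-velocity model.

    State transition: pos' = pos + vel * dt, vel' = vel.
    Covariance update: P' = F P F^T + Q with F = [[1, dt], [0, 1]]
    and an assumed diagonal process noise Q = diag(q_pos, q_vel).
    `cov` is a symmetric 2x2 covariance given as nested tuples or lists.
    """
    (p_pp, p_pv), (_, p_vv) = cov
    new_pos = pos + vel * dt
    new_vel = vel
    new_cov = (
        (p_pp + 2.0 * dt * p_pv + dt * dt * p_vv + q_pos, p_pv + dt * p_vv),
        (p_pv + dt * p_vv, p_vv + q_vel),
    )
    return new_pos, new_vel, new_cov


if __name__ == "__main__":
    pos, vel = 0.0, 10.0
    cov = ((0.0, 0.0), (0.0, 1.0))  # known position, uncertain velocity
    for step in range(1, 4):
        pos, vel, cov = kalman_predict_cv(pos, vel, cov)
        print(f"step {step}: pos={pos:.2f} position variance={cov[0][0]:.4f}")
```

Even in this fixed-model setting, position uncertainty at each step is determined by the uncertainty at the previous step rather than chosen freely.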

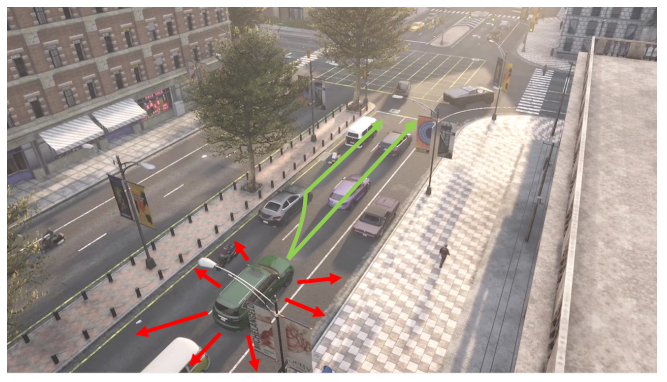

\ In this paper, we go one step beyond previous work by relating distributions of kinematic parameters to distributions of trajectory rollouts, rather than relating single deterministic kinematic parameter predictions to the mean of trajectory rollouts [1], [4]. We hypothesize that modeling uncertainty explicitly across timesteps according to a kinematic model will result in better performance, more realistic trajectories, and more stable learning, especially in disadvantageous settings such as small or noisy datasets, which can be common, especially in fine-tuning. We run experiments on four different kinematic formulations with Motion Transformer (MTR) [7] and observe up to a 50% performance boost in partial-dataset settings and up to an 8% performance boost in full-dataset settings on mean average precision (mAP) metrics for the Waymo Open Motion Dataset (WOMD) [8], compared to enforcing kinematic priors on mean trajectories only.
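As a rough illustration of what relating distributions across timesteps can look like, the sketch below propagates a Gaussian over position through the simplest case: a one-dimensional velocity formulation where the position at the next timestep equals the current position plus velocity times `dt`. It assumes the predicted velocities are Gaussian and independent of each other and of the current position, so the variances simply add. This independence assumption, and the function names, are choices made for this example; the paper's own formulations and approximations are given in its Section IV and may differ.

```python
import math
import random
import statistics


def propagate_velocity_gaussian(x0_mean, x0_var, v_means, v_vars, dt=0.1):
    """Analytically propagate position mean and variance over timesteps.

    Assumes x[t+1] = x[t] + v[t] * dt with independent Gaussian v[t], so
        mean[t+1] = mean[t] + v_mean[t] * dt
        var[t+1]  = var[t]  + v_var[t]  * dt**2
    """
    means, variances = [], []
    mean, var = x0_mean, x0_var
    for mu_v, var_v in zip(v_means, v_vars):
        mean = mean + mu_v * dt
        var = var + var_v * dt ** 2
        means.append(mean)
        variances.append(var)
    return means, variances


def monte_carlo_position_stats(x0_mean, x0_var, v_means, v_vars,
                               dt=0.1, n_samples=20_000, seed=0):
    """Estimate the same statistics by sampling, as a sanity check."""
    rng = random.Random(seed)
    samples_per_step = [[] for _ in v_means]
    for _ in range(n_samples):
        x = rng.gauss(x0_mean, math.sqrt(x0_var))
        for t, (mu_v, var_v) in enumerate(zip(v_means, v_vars)):
            x += rng.gauss(mu_v, math.sqrt(var_v)) * dt
            samples_per_step[t].append(x)
    means = [statistics.fmean(s) for s in samples_per_step]
    variances = [statistics.pvariance(s) for s in samples_per_step]
    return means, variances


if __name__ == "__main__":
    v_means, v_vars = [10.0, 10.0, 10.0], [1.0, 1.0, 1.0]
    print(propagate_velocity_gaussian(0.0, 0.0, v_means, v_vars))
    print(monte_carlo_position_stats(0.0, 0.0, v_means, v_vars))
```

In this simplified setting the variance at each timestep is fully determined by the variance at the previous timestep and the action uncertainty, so it does not need to be learned as a free parameter. Nonlinear formulations such as the bicycle model would need approximations instead of this exact sum.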

\ In summary, the main contributions of this work include:

- A simple and effective method for incorporating analytically derived kinematic priors into probabilistic models for trajectory forecasting (Section IV), which boosts performance and generalization (Section V) and requires only trivial additional computational overhead;

- Results and analysis in different settings on four different kinematic formulations: velocity components $v_x$ and $v_y$, acceleration components $a_x$ and $a_y$, speed $s$ and heading $\theta$, and steering $\delta$ and acceleration $a$ (Section IV-B);

- Analytical error bounds for the first- and second-order kinematic formulations (Section IV-C).


II. RELATED WORKS

A. Trajectory Forecasting for Traffic

Traffic trajectory forecasting is a popular task where the goal is to predict the short-term future trajectories of multiple agents in a traffic scene. Being able to predict the future positions and intents of each vehicle provides context for other modules in autonomous driving, such as path planning. Large, robust benchmarks such as the Waymo Open Motion Dataset [8], [9], Argoverse [10], and the nuScenes dataset [11] have provided a standardized setting for advancements in the task, with leaderboards showing clear rankings for state-of-the-art models. Among the top-performing architectures, most are based on Transformers for feature extraction [7], [12], [13], [14], [15]. Current SOTA models also model trajectory prediction probabilistically, inspired by the use of Gaussian mixture models (GMMs) in MultiPath [16].

\ One common theme among the relevant state-of-the-art, however, is that works employing kinematic models for time-integrated trajectory rollouts typically consider kinematic variables only deterministically, which neglects the relation between kinematic input uncertainty and trajectory rollout uncertainty [1], [4], [17]. In our work, we present a method for using kinematic priors that can complement any previous work in trajectory forecasting. Our contribution can be implemented in any of the SOTA methods above, since it is a simple reformulation of the task that needs no additional information.

B. Physics-based Priors for Learning

Model-based learning has been shown to be effective in many applications, especially in robotics and graphics. There are generally two approaches to using models of the real world: 1) learning a model of dynamics via a separate neural network [18], [19], [20], [21], or 2) using existing models of the real world via differentiable simulation [22], [23], [24], [25], [26], [27], [28].

\ In our method, we pursue the latter. Since we are not modeling complex systems such as cloth or fluid, simulating traffic agent states requires only a simple, fast, and differentiable update. In addition, since the kinematic models do not describe interactions between agents, the complexity of the necessary model is greatly reduced. In this paper, we hypothesize that modeling the simple kinematics (e.g., how a vehicle moves forward) with equations will leave the network more expressive capacity for modeling behavior.

:::info This paper is available on arXiv under the CC BY-NC-ND 4.0 (Attribution-NonCommercial-NoDerivs 4.0 International) license.

:::
