Dataset Splits, Vision Encoder, and Hyper-PELT Implementation Details

Table of Links

Abstract and 1. Introduction

-

Related Work

-

Preliminaries

-

Proposed Method

-

Experimental Setup

-

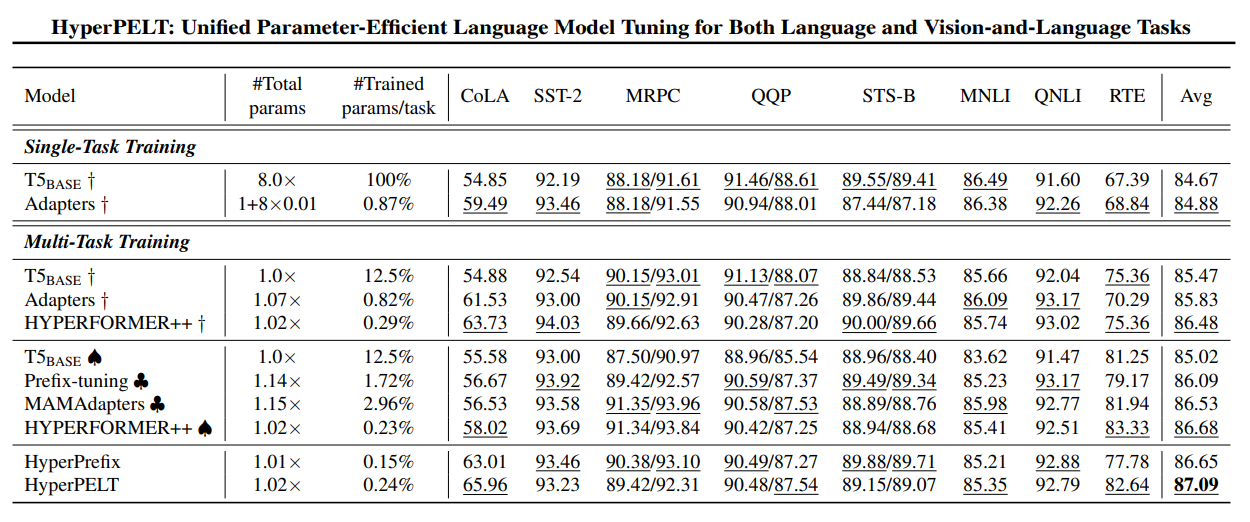

Results and Analysis

-

Discussion and Conclusion, and References

\

A. The Connection Between Prefix-tuning and Hypernetwork

B. Number of Tunable Parameters

C. Input-output formats

5. Experimental Setup

5.1. Datasets

Our framework is evaluated on the GLUE benchmark (Wang et al., 2019b) in terms of natural language understanding.

This benchmark covers paraphrase detection (MRPC, QQP), sentiment classification (SST-2), natural language inference (MNLI, RTE, QNLI), and linguistic acceptability (CoLA). Because the original test sets are not publicly available, we follow Zhang et al. (2021): for datasets with fewer than 10K samples (RTE, MRPC, STS-B, CoLA), we split the original validation set into two halves, one for validation and the other for testing. For the larger datasets, we randomly hold out 1K samples from the training set as validation data and test on the original validation set.
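The split protocol above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the function name `make_splits`, the `seed` argument, the shuffle before halving, and the choice to apply the 10K threshold to the training set size are all assumptions, since the text does not specify them.

```python
import random

SMALL_DATASET_THRESHOLD = 10_000   # "fewer than 10K samples" in the text
HELD_OUT_VALIDATION_SIZE = 1_000   # "1K samples" in the text


def make_splits(train, validation, seed=0):
    """Return (train, validation, test) lists under the protocol described above.

    Assumption: dataset size is measured on the training set, and the
    validation set is shuffled before being halved.
    """
    rng = random.Random(seed)
    if len(train) < SMALL_DATASET_THRESHOLD:
        # Small dataset: keep the training set and halve the original
        # validation set into new validation and test sets.
        shuffled = list(validation)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        return list(train), shuffled[:half], shuffled[half:]
    # Larger dataset: hold out 1K random training samples for validation
    # and use the original validation set as the test set.
    shuffled = list(train)
    rng.shuffle(shuffled)
    return (
        shuffled[HELD_OUT_VALIDATION_SIZE:],
        shuffled[:HELD_OUT_VALIDATION_SIZE],
        list(validation),
    )
```

Fixing the seed keeps the derived validation and test sets identical across runs, which matters when comparing methods on the same split.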

In addition, we evaluate few-shot domain transfer performance on four tasks and datasets: 1) the natural language inference (NLI) dataset CB and 2) the question answering (QA) dataset BoolQ, both from SuperGLUE (Wang et al., 2019a); 3) the sentiment analysis dataset IMDB (Maas et al., 2011); and 4) the paraphrase detection dataset PAWS (Zhang et al., 2019). For CB and BoolQ, since the test sets are not available, we split the validation set into two halves, one for validation and the other for testing. For IMDB, since no validation set is available, we similarly split the test set into validation and test halves. For PAWS, we report results on the original test set.

To evaluate our framework on vision-and-language (V&L) tasks, we experiment on four datasets: COCO (Lin et al., 2014), VQA (Goyal et al., 2017), VG-QA (Krishna et al., 2017), and GQA (Hudson & Manning, 2019). We further evaluate our framework on three datasets for multi-modal few-shot transfer learning, including OKVQA (Marino et al., 2019) and SNLI-VE (Xie et al., 2018).

5.2. Implementation Details

To evaluate our framework in vision-language scenarios, we follow Cho et al. (2021) and convert V&L tasks to a text generation format. We use ResNet101 as our vision encoder and initialize it with CLIP (Radford et al., 2021) [3] pretrained weights. Input images are resized to 224 × 224 for memory efficiency. We extract the 7 × 7 grid features produced by the last convolutional layer. The percentage of updated parameters is also reported as a metric of approach efficiency; the visual encoder is excluded from this computation because it is frozen in our experiments. We count the number of tunable parameters and list the input-output formats of each task in Appendices B and C, respectively.
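The two numeric details in this paragraph can be checked with a small sketch. It assumes the usual ResNet total downsampling factor of 32, under which a 224 × 224 input yields a 7 × 7 final feature map, and it computes the tunable-parameter percentage while skipping the frozen visual encoder. The function names, the `visual_encoder.` name prefix, and the parameter counts in the usage lines are hypothetical and are not taken from the paper.

```python
def grid_side(image_size: int, total_stride: int = 32) -> int:
    """Side length of the final feature map for a square input.

    Assumption: the encoder downsamples by a total factor of 32,
    as standard ResNet architectures do.
    """
    return image_size // total_stride


def tunable_percentage(param_counts, frozen_prefixes=("visual_encoder.",)):
    """Percentage of tunable parameters, excluding the frozen visual encoder.

    param_counts maps a parameter name to (number_of_parameters, is_trainable).
    Names starting with any prefix in frozen_prefixes are left out of both
    the numerator and the denominator.
    """
    total = 0
    tunable = 0
    for name, (count, trainable) in param_counts.items():
        if name.startswith(frozen_prefixes):
            continue  # frozen visual encoder: not counted at all
        total += count
        if trainable:
            tunable += count
    return 100.0 * tunable / total


# Hypothetical counts, for illustration only.
example_counts = {
    "visual_encoder.conv1": (1000, False),
    "backbone.layer0": (900, False),
    "hypernet.w": (100, True),
}
side = grid_side(224)                      # 224 // 32 == 7
pct = tunable_percentage(example_counts)   # 100 / (900 + 100) -> 10.0
```

Leaving the visual encoder out of the denominator makes the reported percentage slightly larger than it would be if those frozen weights were counted in the total.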


:::info Authors:

(1) Zhengkun Zhang, equal contribution; work done during an internship at Noah’s Ark Lab, Huawei Technologies;

(2) Wenya Guo, TKLNDST, CS, Nankai University, China;

(3) Xiaojun Meng, equal contribution, Noah’s Ark Lab, Huawei Technologies;

(4) Yasheng Wang, Noah’s Ark Lab, Huawei Technologies;

(5) Yadao Wang, Noah’s Ark Lab, Huawei Technologies;

(6) Xin Jiang, Noah’s Ark Lab, Huawei Technologies;

(7) Qun Liu, Noah’s Ark Lab, Huawei Technologies;

(8) Zhenglu Yang, TKLNDST, CS, Nankai University, China.

:::

:::info This paper is available on arXiv under the CC BY 4.0 DEED license.

:::

[2] https://huggingface.co/t5-base

[3] https://github.com/openai/CLIP
