Solana Could Get A Turbo Boost As Firedancer Targets Block Restrictions

Solana’s performance push picked up fresh momentum this week as engineers behind Firedancer, the alternative high-performance validator client spearheaded by Jump, filed a new Solana Improvement Document (SIMD-0370) to remove the network’s block-level compute unit (CU) limit. They argue the limit becomes redundant once Alpenglow is in place, and that dropping it would immediately translate into higher throughput and lower latency when demand spikes.

Next Turbo Boost For Solana

The pull request, authored by the “Firedancer Team” and opened on September 24, 2025, is explicitly framed as a “post-Alpenglow” proposal. In Alpenglow, voter nodes broadcast a SkipVote if they cannot execute a proposed block within the allotted time. Because slow blocks are automatically skipped, the authors contend that a separate protocol-enforced CU ceiling per block is unnecessary.

“In Alpenglow, voter nodes broadcast a SkipVote if they do not manage to execute a block in time… This SIMD therefore removes the block compute unit limit enforcement,” the document states, describing the limit as superfluous under the upgraded scheduling rules.
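The rule change can be sketched as two voting checks side by side. This is a minimal, hypothetical Python model, not Agave or Firedancer code: `BLOCK_CU_LIMIT`, `SLOT_BUDGET_MS`, and the `Block` fields are illustrative assumptions, and real Alpenglow voting involves much more than a single timing comparison.

```python
from dataclasses import dataclass

# Hypothetical parameters for illustration only; these are not protocol values.
BLOCK_CU_LIMIT = 1_000_000  # stand-in for a protocol-enforced per-block CU cap
SLOT_BUDGET_MS = 400        # stand-in for the time a voter allows to execute a block


@dataclass
class Block:
    slot: int
    compute_units: int
    exec_time_ms: int  # how long *this* voter took to execute the block


def vote_with_cu_cap(block: Block) -> str:
    """Alpenglow with the block CU limit still enforced: two independent checks."""
    if block.compute_units > BLOCK_CU_LIMIT:
        return "skip"  # over the cap, so rejected even if it executed quickly
    return "vote" if block.exec_time_ms <= SLOT_BUDGET_MS else "skip"


def vote_without_cu_cap(block: Block) -> str:
    """SIMD-0370 as described: only the execution-time budget decides."""
    return "vote" if block.exec_time_ms <= SLOT_BUDGET_MS else "skip"


blocks = [
    Block(slot=1, compute_units=800_000, exec_time_ms=120),
    Block(slot=2, compute_units=3_000_000, exec_time_ms=300),  # heavy but fast
    Block(slot=3, compute_units=5_000_000, exec_time_ms=650),  # too slow to execute in time
]

for b in blocks:
    # prints "1 vote vote", then "2 skip vote", then "3 skip skip"
    print(b.slot, vote_with_cu_cap(b), vote_without_cu_cap(b))
```

In this toy model, slot 2 is the case SIMD-0370 targets: a block that executes well within the budget is skipped only because it exceeds the static cap. Slot 3 is skipped under both rules, which is the timing guardrail the authors rely on. In practice, whether a block is voted in depends on how much stake finishes it in time, not on a single voter.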

Beyond technical cleanliness, the authors pitch a sharper economic alignment. The current block-level CU cap, they argue, distorts incentives because a protocol constant, rather than improvements in hardware and software, sets the network’s capacity. Removing it would let producers fill blocks up to what their machines can safely process and propagate, pushing client and hardware competition to the forefront.

“The capacity of the network is determined not by the capabilities of the hardware but by the arbitrary block compute unit limit,” they write, before outlining why lifting that lid would realign incentives for both validator clients and program developers.

Early code-review comments from core contributors and client teams underline both the near-term user impact and the boundaries of the change. One reviewer summarized the practical upside: “Removing the limit today has tangible benefits for the ecosystem and end users… without waiting for the future architecture of the network to be fleshed out.” Another emphasized that some block constraints would remain, citing a “maximum shred limit,” while others suggested the network should likely retain per-transaction CU limits for now and treat any change there as a separate, more far-reaching discussion.
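The reviewers’ point that some constraints survive can be made concrete with a hypothetical structural check. This Python sketch uses illustrative constants (`MAX_TX_CU`, `MAX_SHREDS_PER_BLOCK`) and a simplified `ProposedBlock` type that are assumptions, not values or types taken from the SIMD or any client; it only shows that per-transaction and shred checks remain while the block-wide CU total is no longer compared against a cap.

```python
from dataclasses import dataclass

# Illustrative constants (assumptions); consult the SIMD and client source for real values.
MAX_TX_CU = 1_400_000          # per-transaction CU ceiling reviewers suggest keeping
MAX_SHREDS_PER_BLOCK = 32_768  # stand-in for the "maximum shred limit"


@dataclass
class ProposedBlock:
    tx_compute_units: list[int]  # CU consumed by each transaction in the block
    shred_count: int             # number of shreds the block is split into


def structurally_valid(block: ProposedBlock) -> bool:
    # The per-transaction limit still applies to every transaction.
    if any(cu > MAX_TX_CU for cu in block.tx_compute_units):
        return False
    # A block-level data bound (shreds) still applies.
    if block.shred_count > MAX_SHREDS_PER_BLOCK:
        return False
    # No check on sum(block.tx_compute_units): that is the cap SIMD-0370 removes.
    return True


heavy = ProposedBlock(tx_compute_units=[1_000_000] * 100, shred_count=20_000)
print(structurally_valid(heavy))  # True: 100M CU in total, but no block-wide cap is checked
```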

Security and liveness considerations feature prominently. Reviewers asked the authors to spell out explicitly why safety is preserved even if a block is too heavy to propagate in time. The Alpenglow answer is that such blocks are simply not voted in: they get skipped, and the chain keeps making forward progress without penalizing the network. The Firedancer authors concur that the decisive guardrail is the clock and propagation budget, not a static CU ceiling.

The proposal also addresses a frequent concern in throughput debates: coordination. If one block producer upgrades hardware aggressively while others lag, does the network risk churn from skipped blocks? One reviewer notes that overly ambitious producers already self-calibrate because missed blocks mean missed rewards, naturally limiting block size to what peers can accept in time. The document further argues that, with the CU limit gone, market forces govern capacity: producers and client teams that optimize execution, networking, and scheduling will win more blocks and fees, pushing the frontier outward as demand warrants.
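The self-calibration argument can be illustrated with a deterministic toy model in which a leader earns fees only if enough stake executes its block within the budget. Every number here (the stake split, execution speeds, `QUORUM_PCT`, the fee rate) is a hypothetical assumption, and real fee income and vote outcomes are neither linear nor deterministic.

```python
# Hypothetical validator set: (stake percent, execution speed in CU per ms).
VALIDATORS = [(30, 20_000), (30, 12_000), (25, 8_000), (15, 4_000)]
SLOT_BUDGET_MS = 400          # assumed execution budget per block
QUORUM_PCT = 60               # assumed stake share needed for a block to be voted in
FEE_UNITS_PER_MILLION_CU = 5  # hypothetical flat fee rate


def stake_pct_finishing_in_time(block_cu: int) -> int:
    """Stake share of validators that can execute block_cu within the budget."""
    return sum(stake for stake, speed in VALIDATORS if block_cu <= speed * SLOT_BUDGET_MS)


def expected_reward(block_cu: int) -> int:
    """Fees if the block reaches quorum; zero if it is skipped."""
    if stake_pct_finishing_in_time(block_cu) >= QUORUM_PCT:
        return block_cu * FEE_UNITS_PER_MILLION_CU // 1_000_000
    return 0


# The leader tries block sizes from 1M to 8M CU and keeps the most profitable one.
candidate_sizes = range(1_000_000, 8_000_001, 200_000)
best = max(candidate_sizes, key=expected_reward)
print(best, expected_reward(best))  # 4800000 24
print(expected_reward(8_000_000))   # 0: only the fastest 30% of stake finishes in time
```

Even a leader running the fastest hardware in this model maximizes revenue at the size the quorum can keep up with, which is the calibration reviewers describe. As slower validators upgrade, that optimum moves outward without any protocol change.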

Crucially, SIMD-0370 is future-compatible. Ongoing designs for multiple concurrent proposers—a long-term roadmap item for Solana—sometimes assume a block limit and sometimes do not. Reviewers stress that removing the current limit does not preclude concurrent-proposer architectures later; it simply unblocks improvements that “can be realized today.”

While the GitHub discussion supplies the technical meat, Anza—the Solana client team behind Agave—has also amplified the proposal on social channels, signaling broad client-team attention to the change and its user-facing implications.

What would change for users and developers if SIMD-0370 ships? In peak periods—airdrops, mints, market volatility—blocks could carry more compute as long as they can be executed and propagated within slot time, potentially raising sustained throughput and smoothing fee spikes.
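The throughput effect can be sketched with a simplified leader that packs transactions greedily by fee per CU. This Python model is an assumption-laden illustration, not Agave’s or Firedancer’s scheduler: it treats execution as sequential at a fixed speed, ignores parallelism, account locks, and propagation time, and uses hypothetical cap, speed, and fee values.

```python
from typing import NamedTuple


class Tx(NamedTuple):
    name: str
    compute_units: int
    priority_fee: int  # hypothetical fee units


def pack(txs: list[Tx], cu_budget: int) -> list[Tx]:
    """Greedy packing by fee per CU; skips any transaction that would overflow the budget."""
    chosen, used = [], 0
    for tx in sorted(txs, key=lambda t: t.priority_fee / t.compute_units, reverse=True):
        if used + tx.compute_units <= cu_budget:
            chosen.append(tx)
            used += tx.compute_units
    return chosen


# Hypothetical numbers for a demand spike.
HYPOTHETICAL_BLOCK_CAP = 2_000_000
EXEC_SPEED_CU_PER_MS = 8_000  # leader's assumed sequential execution speed
EXEC_BUDGET_MS = 400          # assumed time budget for execution

time_capacity = EXEC_SPEED_CU_PER_MS * EXEC_BUDGET_MS  # 3,200,000 CU
spike = [Tx(f"tx{i}", 400_000, 1_000 + 100 * i) for i in range(10)]

capped = pack(spike, min(HYPOTHETICAL_BLOCK_CAP, time_capacity))
uncapped = pack(spike, time_capacity)
print(len(capped), len(uncapped))  # 5 8
```

With the same pending demand, the capped block stops at the protocol limit while the uncapped one fills to what the assumed hardware can execute in time, so three more transactions clear in this slot instead of waiting for later ones.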

For Solana developers, higher headroom and stronger incentives for client/hardware optimization could reduce tail latency for demanding workloads, albeit with the continuing need to optimize programs for parallelism and locality. For validators, the competitive edge would tilt even more toward execution efficiency, networking performance, and smart block-building policies that balance fee revenue against the risk of producing a block so heavy it gets skipped.

As with all SIMDs, the change is subject to community review, implementation, and deployment coordination across validator clients. But the direction is clear: post-Alpenglow, the proposal’s backers see the slot-time budget, not a static CU ceiling, as the real limiter.

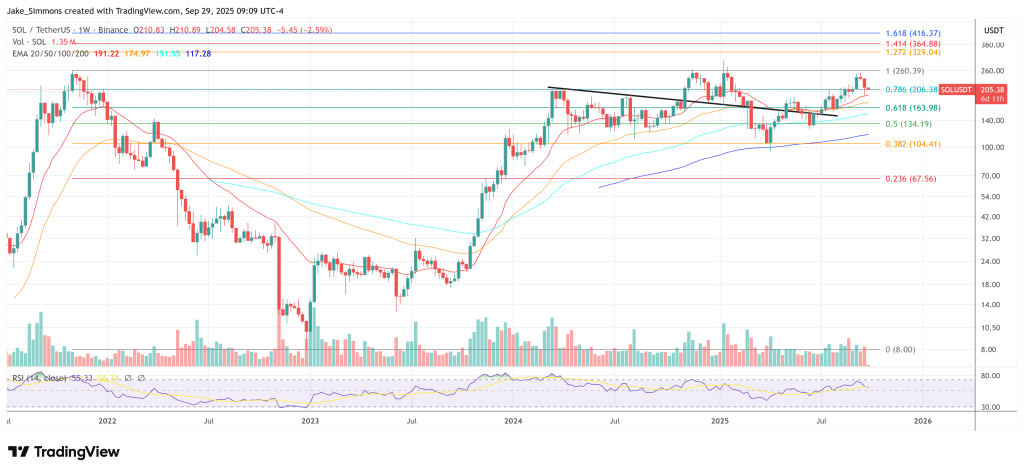

At press time, Solana traded at $205.38.
