Using Graph Transformers to Predict Business Process Completion Times

:::info Authors:

(1) Keyvan Amiri Elyasi (ORCID 0009-0007-3016-2392), Data and Web Science Group, University of Mannheim, Germany ([email protected]);

(2) Han van der Aa (ORCID 0000-0002-4200-4937), Faculty of Computer Science, University of Vienna, Austria ([email protected]);

(3) Heiner Stuckenschmidt (ORCID 0000-0002-0209-3859), Data and Web Science Group, University of Mannheim, Germany ([email protected]).

:::

Table of Links

Abstract and 1. Introduction

2. Background and Related work

3. Preliminaries

4. PGTNet for Remaining Time Prediction

4.1 Graph Representation of Event Prefixes

4.2 Training PGTNet to Predict Remaining Time

5. Evaluation

5.1 Experimental Setup

5.2 Results

6. Conclusion and Future Work, and References

Abstract. We present PGTNet, an approach that transforms event logs into graph datasets and leverages graph-oriented data for training Process Graph Transformer Networks to predict the remaining time of business process instances. PGTNet consistently outperforms state-of-the-art deep learning approaches across a diverse range of 20 publicly available real-world event logs. Notably, our approach is most promising for highly complex processes, where existing deep learning approaches encounter difficulties stemming from their limited ability to learn control-flow relationships among process activities and capture long-range dependencies. PGTNet addresses these challenges, while also being able to consider multiple process perspectives during the learning process.

1 Introduction

Predictive process monitoring (PPM) aims to forecast the future behaviour of running business process instances, thereby enabling organizations to optimize their resource allocation and planning [17], as well as take corrective actions [7]. An important task in PPM is remaining time prediction, which strives to accurately predict the time until an active process instance will be completed. Precise estimations for remaining time are crucial for avoiding deadline violations, optimizing operational efficiency, and providing estimates to customers [13, 17].
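The prediction target can be made concrete with a small sketch. It assumes that the remaining time of a prefix is the completion timestamp of the case minus the timestamp of the latest observed event; the function name `remaining_times` and the example timestamps are hypothetical and serve only as illustration.

```python
from datetime import datetime


def remaining_times(timestamps):
    """Return the remaining time in seconds after each event of a completed case.

    Assumption: timestamps are ordered, and the last one marks case completion.
    """
    if not timestamps:
        return []
    end = timestamps[-1]
    return [(end - t).total_seconds() for t in timestamps]


# Hypothetical case with three events on the same day.
case = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 1, 9, 30),
    datetime(2024, 1, 1, 11, 0),
]
targets = remaining_times(case)
print(targets)  # [7200.0, 5400.0, 0.0]
```

Each prefix of a completed case yields one training target in this way, so a single historical case contributes as many examples as it has events.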

A variety of approaches have been developed to tackle remaining time prediction, with recent works primarily being based on deep learning architectures. In this regard, approaches using deep neural networks are among the most prominent ones [15]. However, the predictive accuracy of these networks leaves considerable room for improvement. In particular, they face challenges when it comes to capturing long-range dependencies [2] and other control-flow relationships (such as loops and parallelism) between process activities [22], and they also struggle to harness information from additional process perspectives, such as case and event attributes [13]. Other architectures can overcome some of these individual challenges. For instance, the Transformer architecture can learn long-range dependencies [2], graph neural networks (GNNs) can explicitly incorporate control-flow structures into the learning process [22], and LSTM (long short-term memory) architectures can be used to incorporate (parts of) the data perspective [13]. However, so far, no deep learning approach can effectively deal with all of these challenges simultaneously.

Therefore, this paper introduces PGTNet, a novel approach for remaining time prediction that can tackle all these challenges at once. Specifically, our approach converts event data into a graph-oriented representation, which allows us to subsequently employ a neural network based on the general, powerful, scalable (GPS) Graph Transformer architecture [16] to make predictions. Graph Transformers (GTs) have shown impressive performance in various graph regression tasks [4, 10, 16] and their theoretical expressive power closely aligns with our objectives: they can deal with multi-perspective data (covering various process perspectives) and can effectively capture long-range dependencies and recognize local control-flow structures. GTs achieve these latter benefits through a combination of local message-passing neural networks (MPNNs) [6] and a global attention mechanism [18]. They employ sparse message-passing within their GNN blocks to learn local control-flow relationships among process activities, while their Transformer blocks attend to all events in the running process instance to capture the global context.
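The combination of sparse local message passing and dense global attention can be sketched as follows. This is a deliberately simplified, weight-free illustration and not the GPS layer or PGTNet itself: it has no learned projections, normalization, or feed-forward block, and the function names (`local_message_passing`, `global_attention`, `hybrid_layer`) as well as the toy graph are hypothetical.

```python
import math


def local_message_passing(x, edges):
    """Sparse, local step: average the features of each node's incoming neighbours."""
    dim = len(x[0])
    out = [[0.0] * dim for _ in x]
    counts = [0] * len(x)
    for src, dst in edges:
        counts[dst] += 1
        for k in range(dim):
            out[dst][k] += x[src][k]
    for i, c in enumerate(counts):
        if c:
            out[i] = [v / c for v in out[i]]
    return out


def global_attention(x):
    """Dense, global step: scaled dot-product self-attention over all nodes."""
    dim = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in x]
        top = max(scores)  # subtract the maximum for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append(
            [sum(w / total * v[k] for w, v in zip(weights, x)) for k in range(dim)]
        )
    return out


def hybrid_layer(x, edges):
    """Add the local and global outputs to the input (residual-style sum)."""
    local = local_message_passing(x, edges)
    glob = global_attention(x)
    return [
        [xi + li + gi for xi, li, gi in zip(xr, lr, gr)]
        for xr, lr, gr in zip(x, local, glob)
    ]


# Toy graph: three nodes with 2-dimensional features and a chain 0 -> 1 -> 2.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
edges = [(0, 1), (1, 2)]
out = hybrid_layer(x, edges)
```

The local step only sees edges that exist in the graph, whereas the attention step lets every node attend to every other node, which is what allows distant events to influence each other within a single layer.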

We evaluated the effectiveness of PGTNet for remaining time prediction using 20 publicly available real event logs. Our experiments show that our approach outperforms current state-of-the-art deep learning approaches in terms of accuracy and earliness of predictions. We also investigated the relationship between process complexity and the performance of PGTNet, which revealed that our approach particularly achieved superior predictive performance (compared to existing approaches) for highly complex, flexible processes.

The remainder of the paper is structured as follows: Section 2 covers background and related work, Section 3 presents preliminary concepts, Section 4 introduces our proposed approach for remaining time prediction, Section 5 discusses the experimental setup and key findings, and Section 6 summarizes our contributions and suggests future research directions.

2 Background and Related work

This section briefly discusses related work on remaining time prediction and provides more details on Graph Transformers.

Remaining time prediction. Various approaches have been proposed for remaining time prediction, encompassing process-aware approaches relying on transition systems, stochastic Petri Nets, and queuing models, along with machine learning-based approaches [20]. In recent years, approaches based on deep learning have emerged as the foremost methods for predicting remaining time [15]. These approaches use different neural network architectures such as LSTMs [13, 17], Transformers [2], and GNNs [3].

Vector embeddings and feature vectors, which constitute the data inputs for Transformers and LSTMs, cannot directly integrate control-flow relationships into the learning process. To overcome this constraint, event logs can be converted into graph data, which then acts as input for training a Graph Neural Network (GNN) [22]. GNNs effectively incorporate control-flow structures by aligning the computation graph with input data. Nevertheless, they suffer from over-smoothing and over-squashing problems [10] and, like LSTMs, struggle to learn long-range dependencies [16]. Moreover, existing graph-based predictive models face limitations due to the expressive capacity of their graph-oriented data inputs. Current graph representations of business process instances either focus solely on the control-flow perspective [7, 19] or conceptualize events as nodes [3, 22], leading to a linear graph structure that adversely impacts the performance of a downstream GNN.
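The difference between the two kinds of graph representation can be illustrated with a short sketch. It is not the representation that PGTNet uses (that is the subject of Section 4.1); the function names and the activity labels are hypothetical, and the activity-based variant is a plain directly-follows count.

```python
def event_node_graph(prefix):
    """Events as nodes: node i is the i-th event, edges link consecutive events."""
    nodes = list(range(len(prefix)))
    edges = [(i, i + 1) for i in range(len(prefix) - 1)]
    return nodes, edges


def activity_node_graph(prefix):
    """Activities as nodes: edges are directly-follows pairs with their frequency."""
    nodes = sorted(set(prefix))
    edges = {}
    for src, dst in zip(prefix, prefix[1:]):
        edges[(src, dst)] = edges.get((src, dst), 0) + 1
    return nodes, edges


# Hypothetical prefix in which activities B and C are repeated (a loop).
prefix = ["A", "B", "C", "B", "C", "D"]

chain_nodes, chain_edges = event_node_graph(prefix)
act_nodes, act_edges = activity_node_graph(prefix)

print(chain_edges)  # [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
print(act_edges)    # {('A', 'B'): 1, ('B', 'C'): 2, ('C', 'B'): 1, ('C', 'D'): 1}
```

With events as nodes, the prefix always becomes a simple chain, so the loop between B and C is invisible in the graph structure. With activities as nodes, the same prefix yields a cycle between B and C, at the cost of losing per-event information unless it is attached as node or edge features.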

Graph Transformers. Inspired by the successful application of the self-attention mechanism in natural language processing, two distinct solutions have emerged to address the limitations of GNNs. The first approach unifies GNN and Transformer modules in a single architecture, while the second compresses the graph structure into positional (PE) and structural (SE) embeddings. These embeddings are then added to the input before feeding it into the Transformer network [16]. Collectively known as Graph Transformers (GTs), both solutions aim to overcome the limitations of GNNs by enabling information propagation across the graph through full connectivity [10, 16]. GTs also possess greater expressive power compared to conventional Transformers, as they can incorporate local context using sparse information obtained from the graph structure [4].

Building upon this theoretical foundation, we propose to convert event logs into graph datasets to enable remaining time prediction using a Process Graph Transformer Network (PGTNet), as discussed in the remainder.

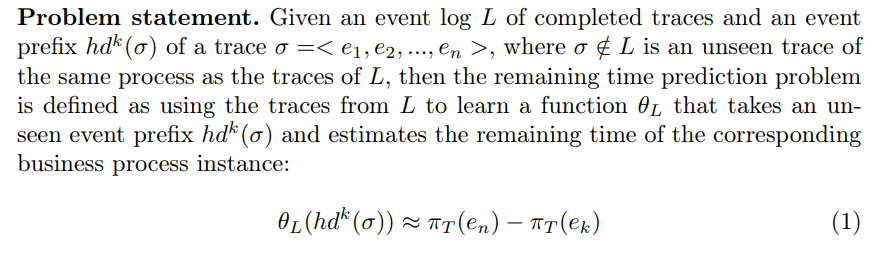

3 Preliminaries

This section presents essential concepts that will be used in the remainder.


:::info This paper is available on arXiv under the CC BY-NC-ND 4.0 Deed (Attribution-NonCommercial-NoDerivs 4.0 International) license.

:::
