Beyond the Prompt: Five Lessons from Anthropic on AI's Most Valuable Resource

The Hidden Challenge Beyond the Prompt

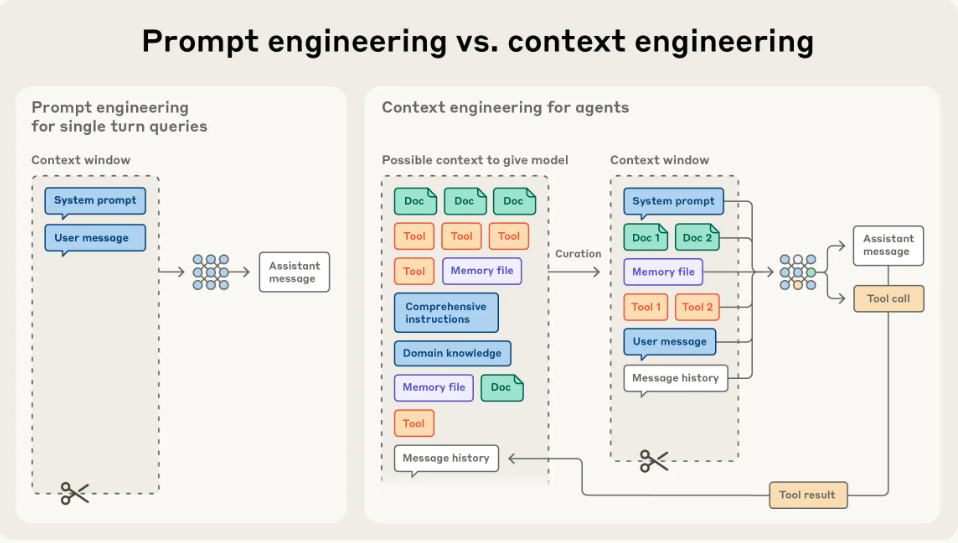

For the past few years, "prompt engineering" has dominated the conversation in applied AI. The focus has been on mastering the art of instruction: finding the perfect words and structure to elicit a desired response from a language model. But as developers move from simple, one-shot tasks to building complex, multi-step AI "agents," a more fundamental challenge has emerged: context engineering.

This shift marks a new phase in building with AI, moving beyond the initial command to managing the entire universe of information an AI sees at any given moment. As experts at Anthropic have framed it:

"Building with language models is becoming less about finding the right words and phrases for your prompts, and more about answering the broader question of 'what configuration of context is most likely to generate our model’s desired behavior?'"

Mastering this new art is critical for creating capable, reliable agents. This article reveals five of the most impactful and counter-intuitive lessons from Anthropic on how to engineer context effectively.

Takeaway 1: The Era of "Prompt Engineering" Is Evolving

Context engineering is the natural and necessary evolution of prompt engineering. As AI applications grow in complexity, the initial prompt is just one piece of a much larger puzzle.

The two concepts can be clearly distinguished:


- Prompt Engineering: Focuses on writing and organizing the initial set of instructions for a Large Language Model (LLM) to achieve an optimal outcome in a discrete task.

- Context Engineering: A broader, iterative process of curating the entire set of information an LLM has access to at any point during its operation. This includes the system prompt, available tools, external data, message history, and other elements like the Model Context Protocol (MCP).
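
To make the distinction concrete, here is a minimal sketch of context as a data structure, where the prompt is only one field among several. The `AgentContext` class, its field names, and the `render` format are illustrative assumptions, not an Anthropic API.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Hypothetical container for everything the model sees on one turn."""
    system_prompt: str
    tools: list[str] = field(default_factory=list)          # tool descriptions
    external_data: list[str] = field(default_factory=list)  # retrieved documents
    message_history: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Flatten all components into the text handed to the model."""
        sections = [
            ("SYSTEM", [self.system_prompt]),
            ("TOOLS", self.tools),
            ("DATA", self.external_data),
            ("HISTORY", self.message_history),
        ]
        lines = []
        for title, items in sections:
            if items:  # omit empty sections so they cost no tokens
                lines.append(f"## {title}")
                lines.extend(items)
        return "\n".join(lines)


ctx = AgentContext(system_prompt="You are a careful research assistant.")
ctx.tools.append("search(query): look up documents")
ctx.message_history.append("user: Summarize the latest report.")
rendered = ctx.render()
print(rendered)
```

Prompt engineering, in this framing, tunes `system_prompt`; context engineering decides what goes into every field on every turn.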


Takeaway 2: More Context Can Actually Make an AI Dumber

Simply expanding an LLM's context window is not a perfect solution for building smarter agents. In fact, more context can sometimes degrade performance. This counter-intuitive phenomenon is known as "context rot."

This happens because LLMs, like humans with their limited working memory, operate with an "attention budget." This scarcity stems from the underlying transformer architecture, which allows every token to attend to every other token, resulting in n² pairwise relationships for n tokens. As the context grows, the model's ability to capture these relationships gets stretched thin, creating a natural tension between the size of the context and the model's ability to maintain focus. Models also tend to see shorter sequences more often than longer ones during training, so they can show reduced precision in long-range reasoning.
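
A quick back-of-the-envelope calculation shows how fast the pairwise count grows. This sketch only counts token pairs under the simplifying assumption that every token attends to every token; it is not a measurement of any real model's compute cost, and the function name is made up for illustration.

```python
def pairwise_relationships(n_tokens: int) -> int:
    """Number of ordered token pairs when every token attends to every token."""
    return n_tokens * n_tokens


for n in (1_000, 10_000, 100_000):
    print(f"{n:>7,} tokens -> {pairwise_relationships(n):,} pairs")
```

Each tenfold increase in context length produces a hundredfold increase in pairs, which is why attention gets spread thinner as the window fills.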

This reality forces a critical shift in perspective: context is not an infinite resource to be filled but a precious, finite one that requires deliberate and careful curation.

Takeaway 3: The Golden Rule is "Less is More"

The guiding principle of effective context engineering is to find the minimum effective dose of information: the smallest set of high-value content that still produces the desired behavior.

This "less is more" philosophy applies across all components of an agent's context:


- System Prompts: Prompts must find the "Goldilocks zone," or "right altitude," between two common failure modes: at one extreme, "brittle if-else hardcoded prompts" that lack flexibility, and at the other, prompts that are "overly general or falsely assume shared context."

- Tools: Avoid bloated tool sets with overlapping functionality. The source offers a powerful heuristic: "If a human engineer can’t definitively say which tool should be used in a given situation, an AI agent can’t be expected to do better." Curating a minimal, unambiguous set of tools is therefore paramount.

- Examples: Instead of a "laundry list of edge cases," it is far more effective to provide a few "diverse, canonical examples" that clearly demonstrate the agent's expected behavior.


Takeaway 4: The Smartest Agents Mimic Human Memory, Not Supercomputers

Instead of trying to load all possible information into an agent's context window, the most effective approach is to retrieve it "just in time." This strategy involves building agents that can dynamically load data as needed, rather than having everything pre-loaded.

This method draws a direct parallel to human cognition. We don't memorize entire libraries; we use organizational systems like bookmarks, file systems, and notes to retrieve relevant information on demand. AI agents can be designed to do the same.

This strategy enables what the source calls "progressive disclosure." Agents incrementally discover relevant context through exploration, assembling understanding layer by layer. Each interaction, such as reading a file name or checking a timestamp, provides signals that inform the next decision, allowing the agent to maintain focus on what's necessary without drowning in irrelevant information.
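
One way to picture progressive disclosure is a two-pass lookup: a cheap pass that exposes only lightweight references, then a targeted load of the one item that looks relevant. The sketch below is an assumption-laden illustration; the helper names, the file names, and the keyword match standing in for the model's decision are all hypothetical.

```python
import tempfile
from pathlib import Path


def list_references(root: Path) -> list[dict]:
    """Cheap first pass: names and sizes only, no file contents."""
    return [
        {"name": p.name, "bytes": p.stat().st_size}
        for p in sorted(root.iterdir())
        if p.is_file()
    ]


def load_on_demand(root: Path, name: str, max_chars: int = 2_000) -> str:
    """Second pass: read one chosen file, capped so it cannot flood the context."""
    return (root / name).read_text(encoding="utf-8")[:max_chars]


# Throwaway files standing in for a real workspace.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "test_results.log").write_text("2 failed, 40 passed\n", encoding="utf-8")
    (root / "archive_2019.csv").write_text("x" * 50_000, encoding="utf-8")

    refs = list_references(root)
    # Stand-in for the model's decision: the file name alone signals relevance.
    chosen = next(r["name"] for r in refs if "test" in r["name"])
    loaded = load_on_demand(root, chosen)

print(refs)
print(loaded)
```

The large archive file never enters the context; only its name and size do, which is enough for the agent to decide it is not needed.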

Two powerful examples illustrate this concept:


- Structured Note-Taking: An agent can maintain an external file, like NOTES.md or a to-do list, to track progress, dependencies, and key decisions on complex tasks. This persists memory outside the main context, which can be referenced as needed.

- The Pokémon Agent: An agent designed to play Pokémon used its own notes to track progress over thousands of steps. It remembered combat strategies, mapped explored regions, and tracked training goals coherently over many hours, a feat that would be impossible if it had to keep every detail in its active context window.
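
Structured note-taking can be sketched in a few lines: the agent appends to a file as it works and later pulls back only the most recent entries. The helper names and the sample notes below are invented for illustration, and a temporary directory stands in for the agent's workspace.

```python
import tempfile
from pathlib import Path


def append_note(notes_file: Path, note: str) -> None:
    """Persist one decision or progress item outside the context window."""
    with notes_file.open("a", encoding="utf-8") as f:
        f.write(f"- {note}\n")


def recent_notes(notes_file: Path, limit: int = 3) -> list[str]:
    """Return only the last few notes to re-inject into the context."""
    if not notes_file.exists():
        return []
    return notes_file.read_text(encoding="utf-8").splitlines()[-limit:]


with tempfile.TemporaryDirectory() as tmp:
    notes = Path(tmp) / "NOTES.md"
    for step in ["parsed config", "found failing test", "patched parser", "tests pass"]:
        append_note(notes, step)
    recalled = recent_notes(notes, limit=2)

print(recalled)
```

The full history stays on disk, while the active context carries only the handful of lines needed for the next step.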


Takeaway 5: Complex Problems Require an AI "Team"

For large, long-horizon tasks that exceed any single context window, a sub-agent architecture is a highly effective strategy. This model mirrors the structure of an effective human team.

The architecture works by having a main agent act as a coordinator or manager. This primary agent delegates focused tasks to specialized sub-agents, each with its own clean context window. The sub-agents perform deep work, such as technical analysis or information gathering, and may use tens of thousands of tokens in the process.

The key benefit is that each sub-agent returns only a "condensed, distilled summary" of its findings to the main agent. This keeps the primary agent's context clean, uncluttered, and focused on high-level strategy and synthesis. This sophisticated method for managing an AI's attention allows teams of agents to tackle problems of a scale and complexity that a single agent cannot.
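
The coordinator pattern described above can be sketched as follows. This is a toy model under stated assumptions: `SubAgent` and `Coordinator` are hypothetical classes, the loop of 100 steps stands in for real model and tool calls, and the summary string stands in for a model-written digest.

```python
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    """Hypothetical worker with its own private context."""
    specialty: str
    context: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        # Stand-in for deep work: the intermediate steps stay in this
        # sub-agent's private context and are never returned.
        for i in range(100):
            self.context.append(f"[{self.specialty}] step {i} on {task}")
        # Only a short summary crosses back to the coordinator.
        return f"{self.specialty}: finished '{task}' in {len(self.context)} steps"


@dataclass
class Coordinator:
    """Hypothetical main agent that sees summaries only."""
    context: list[str] = field(default_factory=list)

    def delegate(self, assignments: dict[str, str]) -> list[str]:
        for specialty, task in assignments.items():
            worker = SubAgent(specialty)  # fresh, clean context per task
            self.context.append(worker.run(task))
        return self.context


lead = Coordinator()
summaries = lead.delegate({
    "research": "collect background sources",
    "analysis": "compare candidate designs",
})
print(summaries)
```

Two hundred intermediate steps were generated in total, yet the coordinator's context holds just two lines.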

Conclusion: Curating Attention is the Future

The art of building effective AI agents is undergoing a fundamental shift: from a discipline of simple instruction to one of sophisticated information and attention management. The core challenge is no longer just crafting the perfect prompt but thoughtfully curating what enters a model's limited attention budget at each step.

Even as models advance toward greater autonomy, the core principles of attention management and context curation will separate brittle, inefficient agents from resilient, high-performing ones. This is not just a technical best practice; it is the strategic foundation for the next generation of AI systems.
